Radeon Vega revealed: 5 things you need to know about AMD’s cutting-edge graphics cards

Image: Brad Chacos

“Wait for Vega.” For the past six months, that’s been the message from the Radeon faithful, as Nvidia’s beastly GeForce GTX 1070 and GTX 1080 stomped above AMD’s Radeon RX 400-series graphics cards.

While Nvidia’s powerful new 16nm Pascal GPU architecture scales all the way from the lowly $120 GTX 1050 to the mighty $1,200 GTX Titan X, AMD’s 14nm Polaris graphics are designed for more mainstream video cards, and the flagship Radeon RX 480 is no match for Nvidia’s higher-end brawlers. Thus “Wait for Vega” has become the rallying cry for AMD supporters with a thirst for face-melting gameplay—Vega being the codename of the new enthusiast-class 14nm Radeon graphics architecture teased on AMD roadmaps for early 2017.

Here’s a video of PCWorld’s Brad Chacos and Gordon Ung talking to Radeon boss Raja Koduri about Vega and FreeSync 2 for over 40 minutes at CES 2017.

Unfortunately, the wait will continue, as the new architecture won’t appear in shipping cards until sometime later in the first half of 2017. But at CES, Vega is becoming more than a mere codename: AMD is finally revealing some technical teases for Radeon’s performance-focused response to Nvidia’s titans, including how the new GPU intertwines graphics performance and memory architectures in radical new ways.

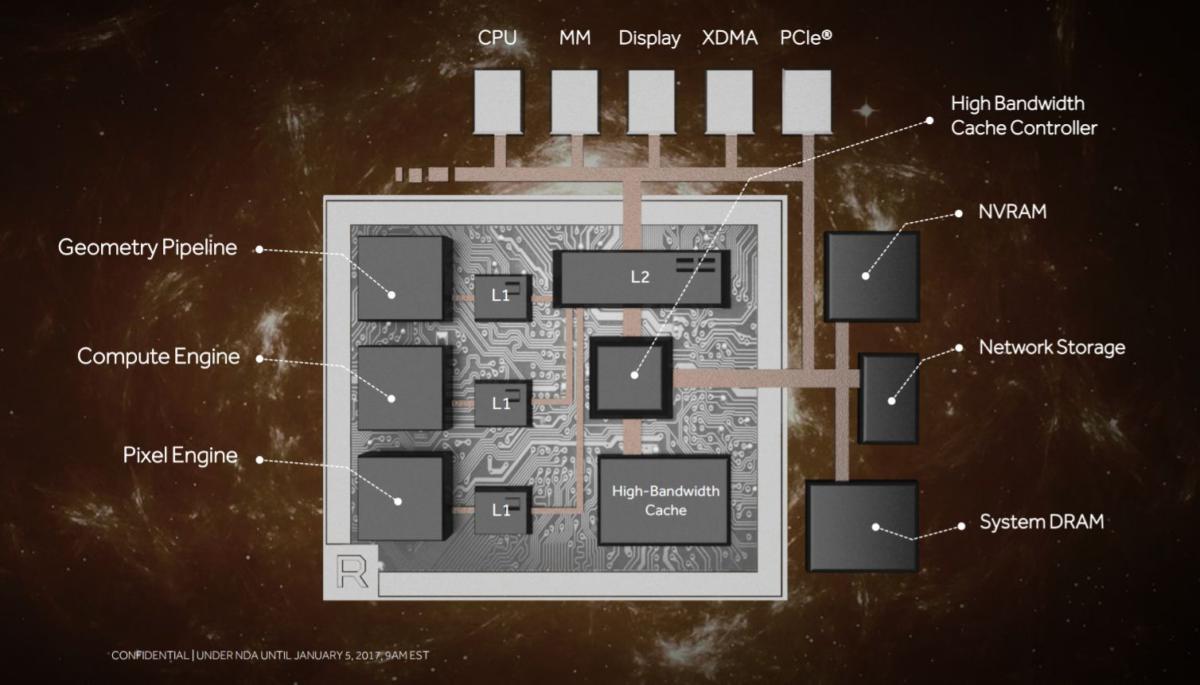

Before we dive in too deeply, here’s a high-level overview of the Vega technical architecture preview.

AMD

A technical preview of AMD’s Radeon Vega graphics architecture.

All those words will become meaningful in time. Let’s start with what you want to hear about first.

1. Damn, it’s fast

Seriously.

In a preview shown to journalists and analysts in December, AMD played 2016’s sublime Doom on an early Radeon Vega 10 graphics card with everything cranked to Ultra at 4K resolution. Doom scales like a champ, but that’s hell on any graphics card: Even the GTX 1080 can’t hit a 60 frames per second average at those settings, per Techspot. Radeon Vega, meanwhile, floated between 60 and 70fps. Sure, it was running Vulkan—a graphics API that favors Radeon cards in Doom—rather than DirectX 11. But, hot damn, the demo was impressive.

A couple of other sightings in recent weeks confirm Vega’s speed. At the New Horizon livestream that introduced AMD’s Ryzen CPU to the world, the company showed Star Wars: Battlefront running on a PC that pairs Ryzen with Vega. The duo maxed out the 4K monitor’s 60Hz refresh rate with everything cranked to Ultra. The GTX 1080, on the other hand, hits just shy of 50fps, Techspot’s testing shows.

Meanwhile, a since-deleted leak in the Ashes of the Singularity database in early December showed a GPU with the Device ID “687F:C1” surpassing many GTX 1080s in benchmark results. Here’s the twist: The Device ID shown in the frame rate overlay during AMD’s recent Vega preview with Doom confirmed that Vega 10 is indeed 687F:C1.

These numbers come with all sorts of caveats: Vega 10 isn’t in its final form yet, we don’t know whether the graphics card AMD teased is Vega’s beefiest incarnation, all three of those benchmarked games heavily favor Radeon, et cetera.

But all that said, Vega certainly looks competitive on the graphics performance front, partly because AMD designed Vega to work smarter, not just harder. “Moving the right data at the right time and working on it the right way,” was a major goal for the team, according to Mike Mantor, an AMD corporate fellow focused on graphics and parallel compute architecture—and a large part of that stems from tying graphics processing more closely with Vega’s radical memory design.

2. All about memory

When it comes to onboard memory, Vega is downright revolutionary—just like its predecessor.

AMD’s current high-end graphics cards, the Radeon Fury series, brought cutting-edge high-bandwidth memory to the world. Vega carries on the torch with improved next-gen HBM2, bolstered by a new “high-bandwidth cache controller” introduced by AMD.

Technical constraints capped the first generation of HBM at a mere 4GB of capacity, which in turn limited the Fury series to 4GB of onboard RAM. Thankfully, HBM’s raw speed hid that flaw in the vast majority of games, but now HBM2 casts off those shackles. AMD hasn’t officially confirmed Vega’s capacity, but the overlay during the Doom demo revealed that particular graphics card packed 8GB of RAM. And that super-fast RAM is getting even faster, with AMD’s Joe Macri stating that HBM2 offers twice the bandwidth per pin of HBM1.
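To see what “twice the bandwidth per pin” means for a whole memory stack, here is a rough back-of-the-envelope sketch. The 1024-bit interface width and the 1 Gb/s and 2 Gb/s per-pin rates are assumptions taken from published HBM specifications, not figures AMD quoted for Vega, and the function name is purely illustrative.

```python
def stack_bandwidth_gbs(pins: int, gbit_per_pin: float) -> float:
    """Peak bandwidth of one memory stack in gigabytes per second."""
    return pins * gbit_per_pin / 8  # 8 bits per byte

HBM_PINS = 1024  # assumption: 1024-bit interface per stack

hbm1 = stack_bandwidth_gbs(HBM_PINS, 1.0)  # assumption: 1 Gb/s per pin
hbm2 = stack_bandwidth_gbs(HBM_PINS, 2.0)  # twice the per-pin rate

print(hbm1)         # 128.0
print(hbm2)         # 256.0
print(hbm2 / hbm1)  # 2.0
```

Under those assumptions, doubling the per-pin rate doubles per-stack bandwidth without widening the interface. Vega’s actual clocks and stack count remain unconfirmed.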

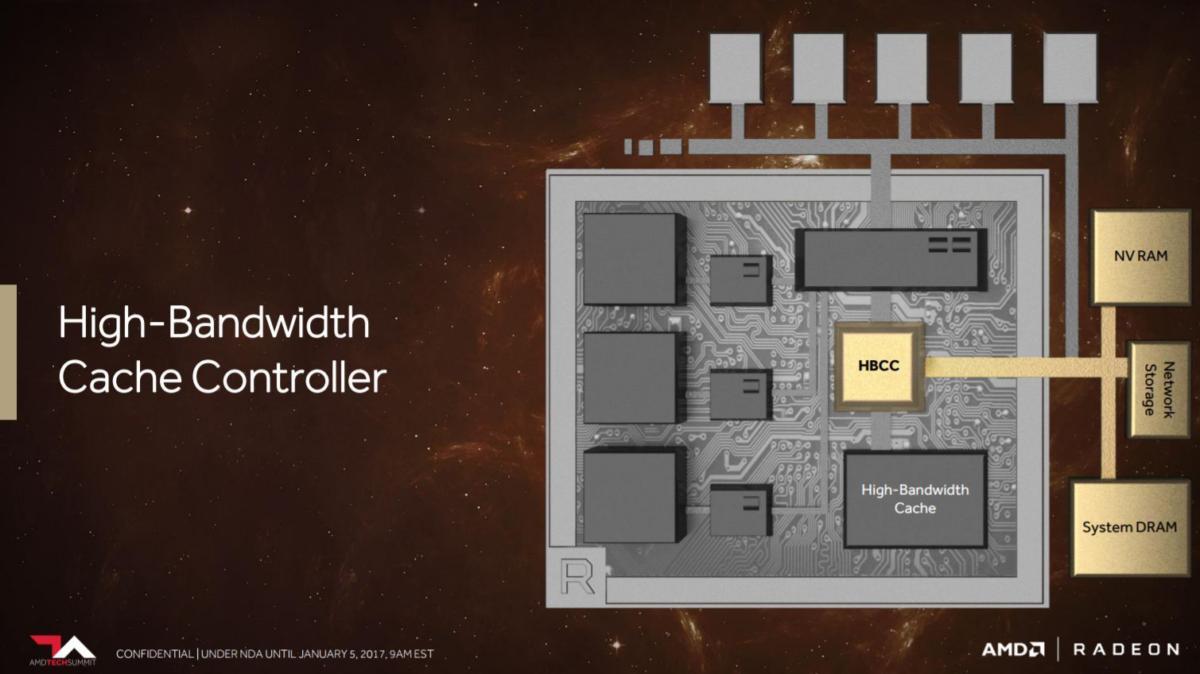

Vega’s high-bandwidth cache and cache controller unlock a world of memory potential.

But as it turns out, HBM was just the beginning. “It’s an evolutionary technology we can take through time, make it bigger, faster, make all these key improvements,” said Macri, a driving force behind HBM’s creation. Vega builds on HBM’s shoulders with the introduction of a new high-bandwidth cache and high-bandwidth cache controller, which combine to form what Radeon boss Raja Koduri calls “the world’s most scalable GPU memory architecture.”

AMD crafted Vega’s high-bandwidth memory architecture to help propel memory design forward in a world where sheer graphics performance keeps improving by leaps and bounds, but memory capacities and capabilities have remained relatively static. The HB cache replaces the graphics card’s traditional frame buffer, while the HB cache controller provides fine-grained control over data and supports a whopping 512 terabytes—not gigabytes, terabytes—of virtual address space. Vega’s HBM design can expand graphics memory beyond onboard RAM to a more heterogeneous memory system capable of managing several memory sources at once.
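For a sense of scale, this quick sketch works out how many address bits a 512-terabyte virtual address space implies, and how it compares with the 8GB of onboard RAM spotted in the Doom demo. It assumes “terabyte” means 2^40 bytes, which AMD did not specify; the helper name is illustrative.

```python
def address_bits(num_bytes: int) -> int:
    """Minimum number of address bits needed to address num_bytes bytes."""
    if num_bytes < 1:
        raise ValueError("num_bytes must be positive")
    return (num_bytes - 1).bit_length()

TIB = 2 ** 40  # assumption: binary terabytes
GIB = 2 ** 30

virtual_space = 512 * TIB
onboard_ram = 8 * GIB  # capacity shown in the Doom demo overlay

print(address_bits(virtual_space))   # 49
print(virtual_space // onboard_ram)  # 65536
```

In other words, the controller’s address space is tens of thousands of times larger than the demo card’s onboard memory, which is what leaves room for other memory sources behind it.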

That’s likely to make its biggest impact in professional applications, such as the new Radeon Instinct lineup or the cutting-edge Radeon Pro SSG card, which grafts high-capacity NAND memory directly onto its graphics processor. “This will allow us to connect terabytes of memory to the GPU,” David Watters, AMD’s head of Industry Alliances, told PCWorld when the Radeon Pro SSG was revealed, and this new cache and controller architecture designed for HBM’s blazing-fast speeds should supercharge those capabilities even more.

To drive the potential benefits home, AMD revealed a photorealistic recreation of Macri’s home living room. The 600GB scene normally takes hours to render, but the combination of Vega’s prowess and the new HBM2 architecture pumps it out in mere minutes. AMD even allowed journalists to move the camera around the room in real-time, albeit somewhat sluggishly. It was an eye-opening demo.

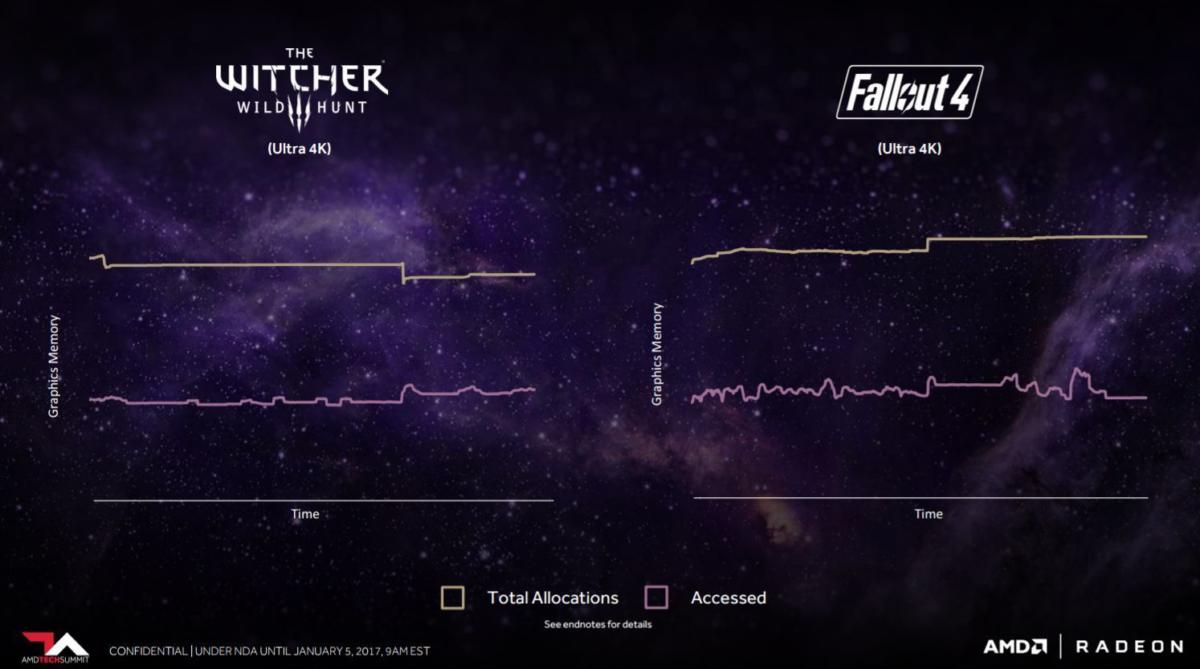

Koduri stressed that games can also benefit from the high-bandwidth cache controller’s fine-grained, dynamic data management, citing Witcher 3 and Fallout 4, each of which actually uses less than half of the memory it allocates when running at 4K resolution. “And those are well-optimized games!” he said. Memory demands are only getting greedier in high-profile games, and doubly so at bleeding-edge resolutions. Here’s hoping that the HB cache’s finer controls, paired with HBM’s sheer speed and the other tweaks discussed later in this article, alleviate that somewhat.

AMD also says that future generations of games could take advantage of high-bandwidth memory design to upload large data sets directly to the graphics processor, rather than handling it with a more hands-on approach as done today.

3. Efficient pipeline management

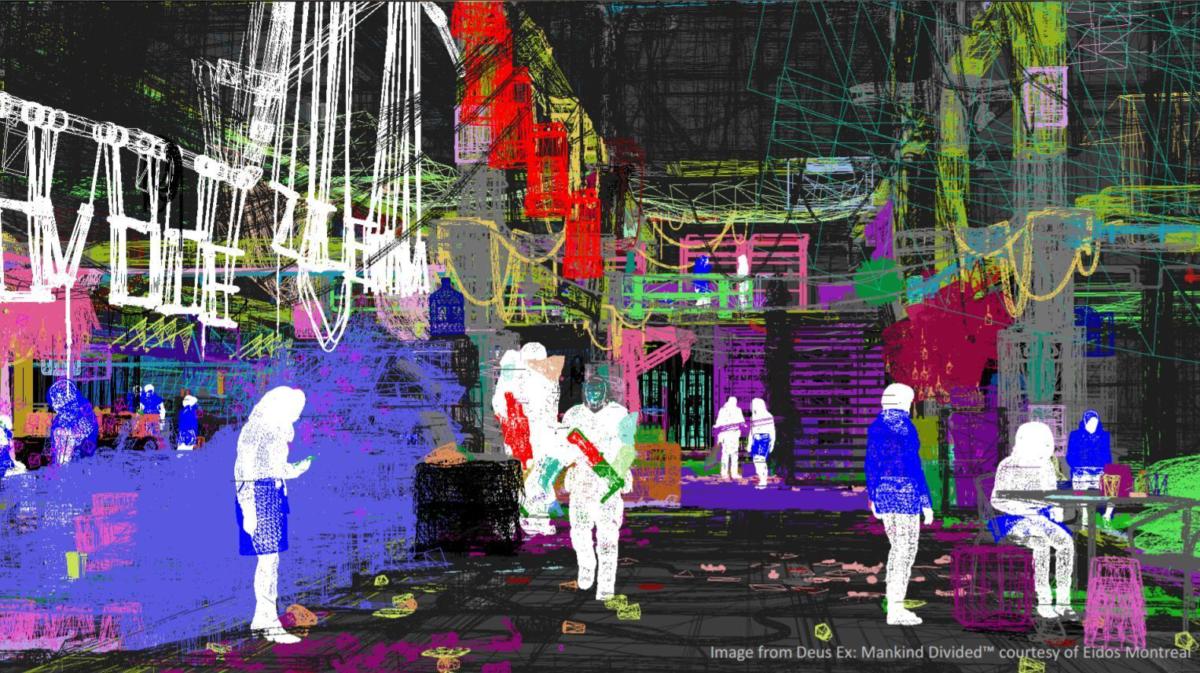

The way graphics cards render games isn’t very efficient. Case in point: this scene from Deus Ex: Mankind Divided. It packs in a whopping 220 million polygons, according to Koduri, but only 2 million or so are actually visible to the player. Enter Vega’s new programmable geometry pipeline.

Rendering a scene is a multi-step process, with graphics cards processing vertex shaders before passing the information on to the geometry engine for additional work. Vega speeds things up with the help of primitive shaders that quickly identify the polygons that aren’t visible to players so that the geometry engine doesn’t waste time on them. Yay, efficiency!
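AMD hasn’t said which visibility tests its primitive shaders run, but the general idea of discarding unseen polygons early can be illustrated with back-face culling, one common technique. The sketch below is a CPU-side illustration only, not Vega’s implementation; all names are hypothetical, and it assumes triangles are wound counter-clockwise when they face the viewer.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def is_front_facing(tri: Triangle, camera: Vec3) -> bool:
    """True if the triangle's normal points toward the camera."""
    v0, v1, v2 = tri
    normal = cross(sub(v1, v0), sub(v2, v0))
    return dot(normal, sub(camera, v0)) > 0.0

def cull_back_faces(tris: List[Triangle], camera: Vec3) -> List[Triangle]:
    """Keep only triangles that face the camera; later stages skip the rest."""
    return [t for t in tris if is_front_facing(t, camera)]

camera = (0.0, 0.0, 5.0)
facing = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
hidden = ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0))  # reversed winding

visible = cull_back_faces([facing, hidden], camera)
print(len(visible))  # 1
```

The payoff is the same as in the article’s example: every triangle rejected here is one the later, more expensive stages never have to touch.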

Vega also blazes through information at over twice the peak throughput of its predecessors, and includes a new “Intelligent Workgroup Distributor” to improve task load balancing from the very beginning of the pipeline.

Vega’s Primitive Shaders.

These tweaks drive home how AMD’s infiltration in consoles can benefit PC gamers, too. The inspiration for the load balancing tweaks comes from console developers used to working “closer to the metal” than PC developers, who highlighted it as a potential area for improvement for AMD, Raja Koduri says.

4. Right task, right time

AMD designed Vega to “smartly schedule past the work that doesn’t have to be done,” according to Mike Mantor. The final tidbits made public by the company drive that home.

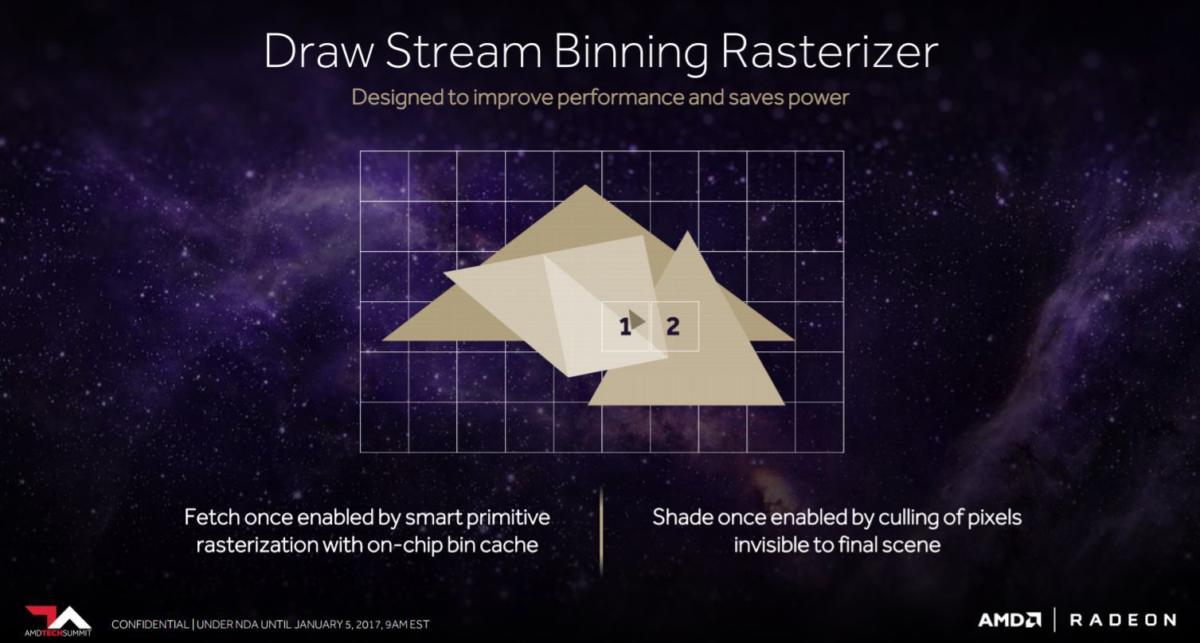

Vega continues AMD’s multi-year push to reduce memory bandwidth consumption (a quest that Nvidia’s also embarked upon). Its next-gen pixel engine includes a “draw stream binning rasterizer” that improves performance and saves power by teaming with the high-bandwidth cache controller to more efficiently process a scene. After the geometry engine performs its (already reduced amount of) work, Vega identifies overlapping pixels that won’t be seen by the user and thus don’t need to be rendered. The GPU then discards those pixels rather than wasting time rendering them. The draw stream binning rasterizer’s design “lets us visit a pixel to be rendered only once,” according to Mantor.
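The “visit a pixel only once” idea can be sketched in software: sort geometry into screen tiles, work out the nearest surface for each pixel in a tile, then shade only that one. The toy model below uses axis-aligned rectangles instead of triangles and is an illustration of the general binning approach under those assumptions, not a description of AMD’s hardware; every name in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Rect:
    x0: int
    y0: int
    x1: int       # exclusive
    y1: int       # exclusive
    depth: float  # smaller means closer to the viewer
    color: str

def bin_rects(rects: List[Rect], tile: int) -> Dict[Tuple[int, int], List[Rect]]:
    """Assign each rectangle to every screen tile it overlaps."""
    bins: Dict[Tuple[int, int], List[Rect]] = {}
    for r in rects:
        for ty in range(r.y0 // tile, (r.y1 - 1) // tile + 1):
            for tx in range(r.x0 // tile, (r.x1 - 1) // tile + 1):
                bins.setdefault((tx, ty), []).append(r)
    return bins

def render_binned(rects: List[Rect], tile: int = 4):
    """Shade each covered pixel once, using only the nearest rectangle."""
    framebuffer: Dict[Tuple[int, int], str] = {}
    shaded = 0
    for (tx, ty), tile_rects in bin_rects(rects, tile).items():
        for y in range(ty * tile, (ty + 1) * tile):
            for x in range(tx * tile, (tx + 1) * tile):
                covering = [r for r in tile_rects
                            if r.x0 <= x < r.x1 and r.y0 <= y < r.y1]
                if covering:
                    nearest = min(covering, key=lambda r: r.depth)
                    framebuffer[(x, y)] = nearest.color  # the single "shade"
                    shaded += 1
    return framebuffer, shaded

def naive_shade_count(rects: List[Rect]) -> int:
    """Shading work if every covered pixel of every rectangle were shaded."""
    return sum((r.x1 - r.x0) * (r.y1 - r.y0) for r in rects)

scene = [Rect(0, 0, 8, 8, 0.9, "blue"),  # far background
         Rect(2, 2, 6, 6, 0.1, "red")]   # near object hiding part of it

fb, shaded = render_binned(scene)
print(naive_shade_count(scene))  # 80
print(shaded)                    # 64
print(fb[(3, 3)], fb[(0, 0)])    # red blue
```

In this toy scene the 16 background pixels hidden behind the red rectangle are never shaded, which is the kind of wasted work the binning approach is meant to avoid.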

Clever!

The revamped Vega architecture also now connects the pixel engine’s render back-ends to the larger, shared L2 cache, rather than having them write directly to the memory controller. AMD says that should help improve performance in GPU compute applications that rely on deferred shading. (For a fine overview on the topic, check out this ExtremeTech article on how L1 and L2 caches work.)

5. Next-gen compute engine

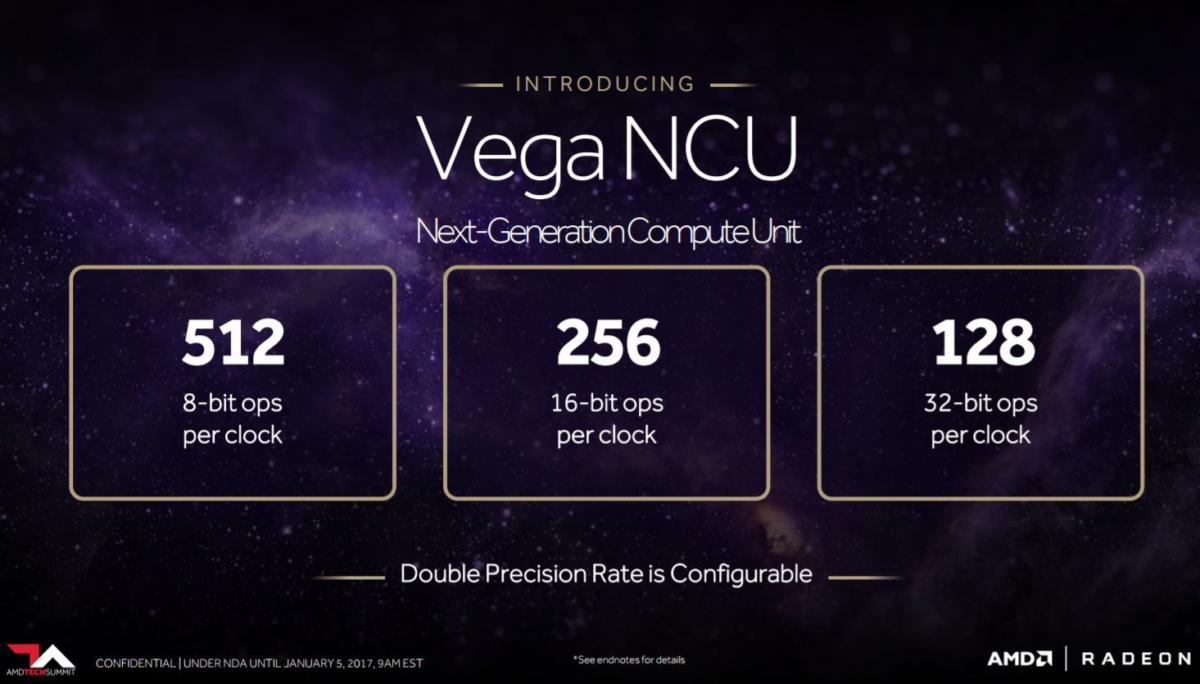

Finally, AMD teased Vega’s “Next-gen compute engine,” which is capable of 512 8-bit operations per clock, 256 16-bit operations per clock, or 128 32-bit operations per clock. The 8- and 16-bit ops mostly matter for machine learning, computer vision, and other GPU compute tasks, though Koduri says the 16-bit ops can come in handy for certain gaming tasks that require less stringent accuracy as well. (The AMD-powered PlayStation 4 Pro also supports 256 16-bit operations per clock.)
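Those three figures follow a simple pattern: halve the operand width and the operation count doubles. One way to read that, as an assumption rather than anything AMD has detailed, is that narrower values are packed into a fixed set of 128 32-bit lanes. The sketch below just reproduces the quoted numbers under that assumption.

```python
def ops_per_clock(bit_width: int, lanes_32bit: int = 128) -> int:
    """Ops per clock if narrow operands are packed into fixed 32-bit lanes.

    The 128-lane default is inferred from the quoted 32-bit figure;
    the packing model itself is an assumption.
    """
    if bit_width <= 0 or 32 % bit_width != 0:
        raise ValueError("bit_width must divide 32 evenly")
    return lanes_32bit * (32 // bit_width)

for width in (8, 16, 32):
    print(width, ops_per_clock(width))
# 8 512
# 16 256
# 32 128
```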

Vega’s New Compute Unit can perform two 16-bit ops at once.

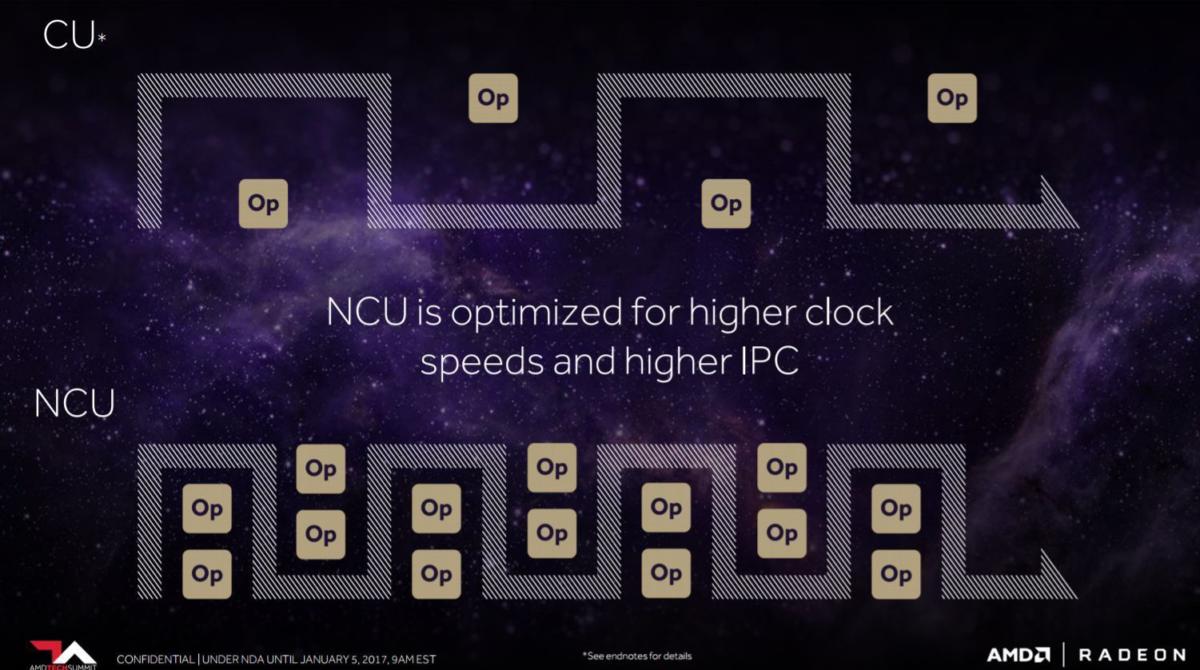

Coincidentally enough, the Vega NCU can perform two 16-bit ops simultaneously, doubled up and scheduled together. This wasn’t possible in previous AMD GPUs, Koduri says. Vega’s next-gen compute unit has been optimized for the GPU’s higher clock speeds and higher instructions-per-cycle—though AMD declined to disclose the core clock speeds for Vega just yet.
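What “doubled up” 16-bit math looks like can be mimicked in software by packing two 16-bit values into one 32-bit word and adding both halves in a single call. This is only a conceptual sketch: it uses unsigned integers for simplicity, the helper names are hypothetical, and AMD has not said which data types or instructions the NCU’s packed math covers.

```python
MASK16 = 0xFFFF

def pack2x16(lo: int, hi: int) -> int:
    """Pack two unsigned 16-bit values into one 32-bit word."""
    return ((hi & MASK16) << 16) | (lo & MASK16)

def unpack2x16(word: int):
    """Split a 32-bit word back into its (lo, hi) 16-bit halves."""
    return word & MASK16, (word >> 16) & MASK16

def packed_add16(a: int, b: int) -> int:
    """Add two packed pairs lane by lane.

    Each lane wraps at 16 bits, and no carry leaks from the low lane
    into the high lane.
    """
    lo = ((a & MASK16) + (b & MASK16)) & MASK16
    hi = (((a >> 16) & MASK16) + ((b >> 16) & MASK16)) & MASK16
    return (hi << 16) | lo

a = pack2x16(1000, 65535)
b = pack2x16(234, 1)
print(unpack2x16(packed_add16(a, b)))  # (1234, 0)
```

The high lane wraps around to 0 instead of spilling over, which shows that the two 16-bit results stay independent even though they travel in one 32-bit word.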

Waiting for Vega

The wait for Vega continues, but now we have some idea of the ace hidden up the Radeon Technologies Group’s sleeve. These technical teases provide just enough of a glimpse to whet the appetite of graphics enthusiasts while revealing tantalizingly little in the way of hard news relating to consumer-focused Vega graphics cards. (AMD doesn’t want to show its hand to Nvidia too much, after all.) It’s clear that AMD’s attempting some nifty new tricks to improve the efficiency and potential of Vega both in games and professional uses. Nitty-gritty details are sure to drip-drop out over the coming months.

Fingers crossed that Vega comes sooner rather than later, however. AMD teased its 14nm Polaris GPU architecture at CES 2016 but failed to actually launch the Radeon RX 480 until the very end of June. Vega’s been slapped with a release window sometime in the first half of 2017, so if AMD waits until E3 to launch this new generation of enthusiast-class graphics cards, Nvidia’s beastly GTX 1080 will have already been on the streets for a full year.

Vega looks awfully damned intriguing, but even the most diehard Radeon loyalists can only wait so long to build a new rig, especially with AMD’s much-hyped Ryzen processors launching very, very soon.