AMD Radeon RX Vega review: Vega 56, Vega 64, and liquid-cooled Vega 64

Image: Brad Chacos/IDG

At a Glance


Pros

- Faster than the GTX 1070
- FreeSync monitors are cheaper than G-Sync monitors
- Cool under load

Cons

- Loud fan
- Uses more power than the competition

Our Verdict

AMD’s Radeon RX Vega 56 manages to be slightly more powerful than the GeForce GTX 1070 while generating less heat. It’s a great option for all gaming except 4K.


After months of teases and delays, the wait for Vega is finally over. First, AMD launched its flagship Radeon RX Vega 64 lineup: the $499 Radeon RX Vega 64 and the $699 liquid-cooled Radeon RX Vega 64, which is only available as part of a convoluted “Radeon Aqua Pack” bundle. (Update: After the initial wave of availability, virtually all air-cooled Radeon RX Vega 64 cards are sold only in $599 Radeon Packs as well.) Then on August 28, the $399 Radeon RX Vega 56 hit the streets. And for the first time in a long time (over a year, in fact), the Red Team is fielding high-end graphics cards capable of challenging Nvidia’s enthusiast-class hardware.


These are the first high-end Radeon chips built using the 14nm manufacturing process, following in the footsteps of AMD’s mainstream-focused Polaris graphics cards. Does Radeon RX Vega wrest the performance crown from the ferocious GeForce GTX 1080 Ti? Spoiler alert: Not even close. But Vega provides an intriguing (and sometimes compelling) alternative to the GTX 1070 and GTX 1080, even if it isn’t the walk-off home run that AMD enthusiasts have been hoping for ever since Radeon marketing took a swipe at Nvidia’s next-gen Volta graphics architecture eight long months ago.

This won’t be a short review. We’re introducing AMD’s new Vega graphics architecture as well as testing three different Radeon RX Vega graphics cards based on that architecture. You can use the table of contents bar to the left to warp to the sections you’re most interested in.

This review was originally published on August 14, 2017 with Vega 64’s arrival but was updated with more information for Vega 56’s August 28 launch.

Meet Radeon RX Vega 56 and RX Vega 64

Brad Chacos/IDG

AMD sent PCWorld every version of Vega for testing, including both the air-cooled and liquid-cooled versions of the Radeon RX Vega 64. While the design and cooling obviously differ, the two models share the same underlying hardware.

The water-cooled Vega 64 hits higher clock speeds (and thus higher levels of performance) thanks to its embrace of liquid, but unfortunately we can’t recommend it. The card sure looks pretty, but it disappoints on pretty much every other front, doubly so because it’s available only in a pricey $699 “Radeon Aqua Pack” edition. We’ll get into the details throughout the review, or you can cut straight to the chase in our Buying Advice section.

AMD

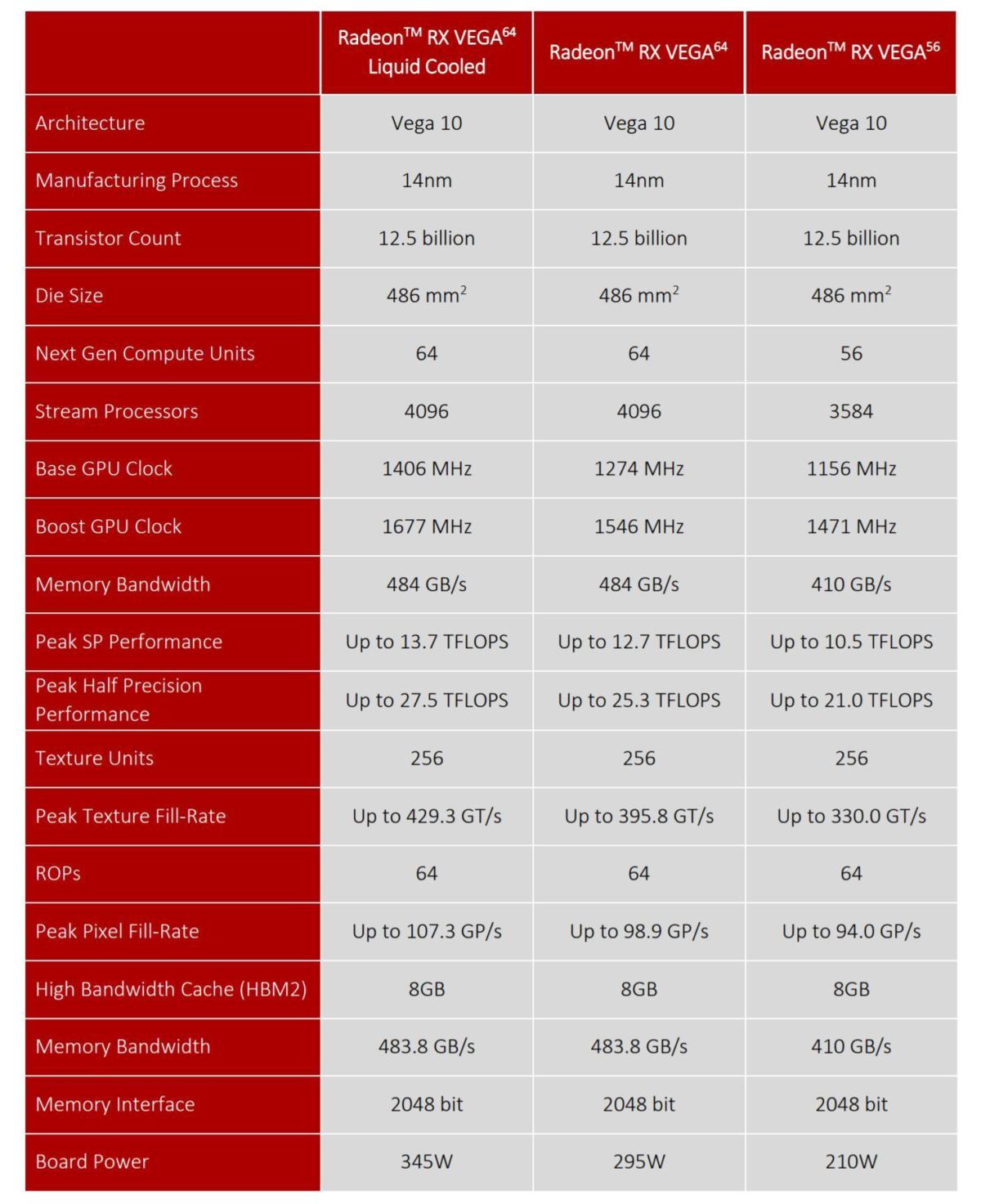

Vega 64’s technology mirrors that of the Fiji GPU found inside the older Radeon R9 Fury X in many ways. Both GPUs pack 4,096 stream processors, with 64 compute units (hence the name), 256 texture units, and 64 ROPs. Likewise, the Radeon RX Vega 56’s innards resemble those of the Fury X’s similarly cut-down sibling, the Radeon R9 Fury. But the compute units inside Vega are “next-gen CUs” (more on that and other deep-level tech later), and Vega distinguishes itself in more obvious ways, too.

The Radeon Technologies Group tuned Vega to run at far higher clock speeds than its predecessor. While the Fury cards hovered around 1,000MHz, the air-cooled RX Vega 64 baselines at 1,247MHz, with a rated boost clock speed of 1,546MHz. The liquid-cooled version pushes that even further, to 1,406MHz base and 1,677MHz boost. Vega 56, meanwhile, bottoms out at 1,156MHz and boosts to 1,471MHz—or potentially more. While the boost clocks of previous Radeon cards were a hard maximum, AMD is mimicking Nvidia’s methodology for Vega. The listed boost clocks for the Radeon RX Vega 56 and 64 “represents the typical average clock speed one might see while gaming” now, to quote AMD’s reviewer’s guide. Groovy.
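Those clock speeds translate into a theoretical peak shader throughput with the usual back-of-the-envelope formula: stream processors × 2 floating-point operations per clock (one fused multiply-add) × clock speed. The sketch below assumes 64 stream processors per compute unit, which gives Vega 56 3,584 of them (the review only states Vega 64’s 4,096), and uses the rated boost clocks quoted above. These are paper ceilings, not measured game performance.

```python
# Theoretical peak single-precision (FP32) throughput, not a benchmark.
# Assumptions: 64 stream processors per compute unit, and each stream
# processor retires one fused multiply-add (counted as 2 FLOPs) per clock.
SP_PER_CU = 64

cards = {
    # name: (compute units, rated boost clock in MHz, as quoted in the review)
    "RX Vega 56": (56, 1471),
    "RX Vega 64 (air)": (64, 1546),
    "RX Vega 64 (liquid)": (64, 1677),
}

def peak_fp32_tflops(compute_units: int, boost_mhz: int) -> float:
    """Peak FP32 TFLOPS = stream processors x 2 FLOPs x clock (Hz) / 1e12."""
    stream_processors = compute_units * SP_PER_CU
    return stream_processors * 2 * boost_mhz * 1e6 / 1e12

for name, (cus, mhz) in cards.items():
    print(f"{name}: {cus * SP_PER_CU} SPs, ~{peak_fp32_tflops(cus, mhz):.1f} TFLOPS FP32")
```

Real games rarely keep every shader busy, so these figures overstate frame-rate differences; they’re mostly useful for comparing cards built on the same architecture.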

Brad Chacos/IDG

Vega’s memory capabilities took a turn for the better, too. Like the older Fury cards, Vega uses cutting-edge high-bandwidth memory. But while the Fury cards utilized first-gen HBM that was limited to 4GB of capacity (and can now struggle in some high-end games because of it), the Radeon RX Vega lineup leans on a more advanced HBM2 design with 8GB of onboard memory. At 484GBps, RX Vega 64’s memory bandwidth is actually slightly less than the Fury X’s 512GBps because Vega uses two HBM2 stacks rather than the Fury X’s four, but it’s still far ahead of the GTX 1080’s 320GBps via 8GB of GDDR5X memory. The 11GB GeForce GTX 1080 Ti offers identical memory bandwidth to Vega 64, while Vega 56 is slightly slower at 410GBps.
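Each of those bandwidth figures falls out of the same formula: per-pin data rate (Gbps) × memory bus width (bits) ÷ 8 bits per byte. The data rates and bus widths below aren’t stated in this review; they’re assumptions drawn from the cards’ commonly published specs (each HBM or HBM2 stack contributes a 1,024-bit interface), and they happen to reproduce the numbers quoted above.

```python
# Memory bandwidth (GB/s) = per-pin data rate (Gbps) x bus width (bits) / 8.
# Assumed specs, not from the review: HBM2 at 1.89 Gbps/pin (Vega 64) and
# 1.6 Gbps/pin (Vega 56) on a 2,048-bit bus (2 stacks x 1,024 bits);
# first-gen HBM at 1.0 Gbps/pin on a 4,096-bit bus (4 stacks) for Fury X.
memory_configs = {
    "RX Vega 64": (1.89, 2048),
    "RX Vega 56": (1.6, 2048),
    "R9 Fury X": (1.0, 4096),
    "GTX 1080 (GDDR5X)": (10.0, 256),
    "GTX 1080 Ti (GDDR5X)": (11.0, 352),
}

def bandwidth_gbps(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Return peak memory bandwidth in gigabytes per second."""
    return data_rate_gbps * bus_width_bits / 8

for name, (rate, width) in memory_configs.items():
    print(f"{name}: {bandwidth_gbps(rate, width):.0f} GB/s")
```

The formula also shows why fewer stacks cost Vega a little bandwidth: halving the bus width has to be offset almost entirely by faster per-pin signaling.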

With 8GB of HBM2 you’re unlikely to hit a memory bottleneck anytime soon—that’s doubly true thanks to an innovative new feature enabled by Vega’s high-bandwidth cache controller. Again, more on Vega’s big new tech features later.

The air-cooled Radeon RX Vega 64 will be available in two forms: a basic version that looks like the reference versions of the Radeon RX 400/500-series Polaris graphics cards, only longer, and a Limited Edition with a brushed aluminum exterior. Limited Edition cards will be sold only to initial RX Vega 64 buyers until supplies last, and AMD wouldn’t say how many are being produced. All Vega cards include a backplate. We’re reviewing the standard models but snagged a pic of the Limited Edition at AMD’s Vega announcement at Siggraph.

Brad Chacos/IDG


The Radeon RX Vega 56 looks similar to the standard RX Vega 64. While both mimic the Polaris reference cards at a quick glance, the Vega cards are longer (despite using space-saving HBM2 memory) and ditch Polaris’s plastic shell for sturdier metal materials, including a backplate. The Radeon logo on the side edge of Vega cards glows red. More importantly, the Vega cards use a vapor-chamber cooler that improves upon the basic blower fan of Polaris. In the photo below, you can compare the Vega 56 (at top) to the Radeon RX 480 reference card (at bottom).

Brad Chacos/IDG


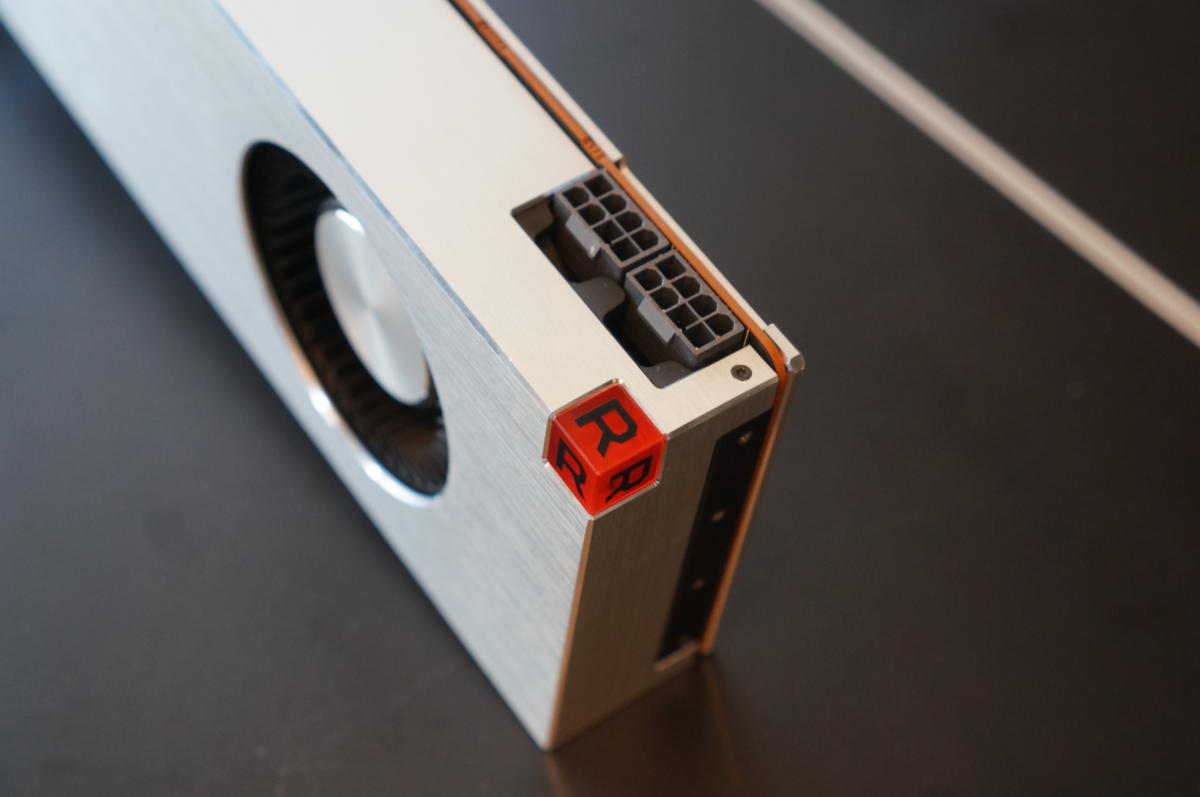

We’re also reviewing the liquid-cooled version of the Radeon RX Vega 64. It bears the same brushed-aluminum design as the air-cooled Vega 64 Limited Edition, but ditches the blower-style fan in favor of integrated water-cooling with a 120mm radiator, similar to the Fury X’s design. From its stark, unblemished face to that ruby-colored “R” in the corner, the liquid-cooled Vega 64 is gorgeous, so much so that my non-techie wife walked into my office, saw the card in its box, stopped dead in her tracks, and gasped, “God, that’s beautiful.” That’s a first.

Brad Chacos/IDG


But any points this card gets for attractiveness are offset by the ill-conceived tubing for the liquid cooling. The older Fury X featured shorter tubing that sprouted from the end of the card, providing just enough length to install its 120mm radiator in the optimal fan spot at the rear of your case. By contrast, the liquid-cooled Vega 64 awkwardly sprouts its tubing from the very front edge of the card, just behind the I/O bracket, and directly underneath where your radiator will likely be installed in your case. That could’ve been okay with short tubing, but Vega 64’s liquid-cooling tubes are actually longer than the Fury X’s (as you see below), yet too short to route comfortably back around the end of the card.

The end result? We had to tuck the problematic bundle of liquid-cooling tubes underneath the card. It wasn’t very attractive and would’ve likely caused some headaches if we’d had other PCI-E devices installed in our mid-tower PC case. Longer tubing is handy for installing reservoirs elsewhere in your case, but this design feels like it could be more refined.

Brad Chacos/IDG


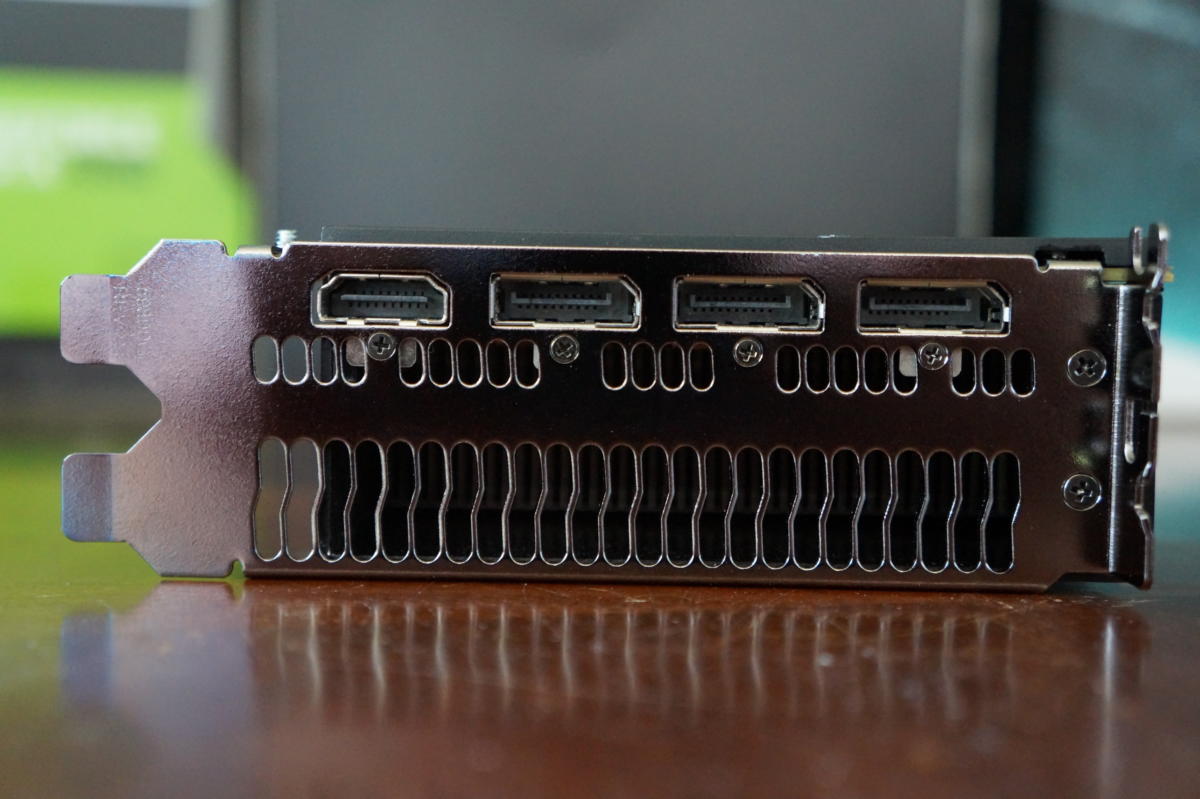

Radeon RX Vega cards include a trio of DisplayPort 1.4-ready outputs and an HDMI 2.0 port. The architecture’s updated display engine can support up to two 4K/120Hz panels, or a trio of 4K/60Hz displays. That’s a whole lot of pixels! And of course, Vega cards support AMD’s FreeSync technology, which eradicates stuttering and screen-tearing on compatible monitors. It’s a major factor in the value proposition that Radeon marketing is pushing for Vega.
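A rough calculation shows why 4K at 120Hz calls for DisplayPort 1.4 rather than HDMI 2.0: multiply pixels × refresh rate × 24 bits per pixel (8-bit color) and compare the result against each link’s usable data rate after 8b/10b encoding (DisplayPort 1.4 HBR3: 4 lanes × 8.1 Gbps ≈ 25.92 Gbps; HDMI 2.0: 18 Gbps ≈ 14.4 Gbps). Those link rates come from the interface specifications, not from this review, and the sketch ignores blanking intervals, so real requirements run somewhat higher.

```python
# Uncompressed video data rate vs. link capacity, ignoring blanking intervals.
# Assumed usable link rates after 8b/10b encoding (80% of the raw rate):
LINKS_GBPS = {
    "DisplayPort 1.4 (HBR3)": 4 * 8.1 * 0.8,  # ~25.92 Gbps
    "HDMI 2.0": 18.0 * 0.8,                   # ~14.4 Gbps
}

def stream_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 24) -> float:
    """Active-pixel data rate, in Gbps, of an uncompressed video stream."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

def fits(link: str, width: int, height: int, refresh_hz: int) -> bool:
    """True if the stream's active-pixel rate fits within the link's capacity."""
    return stream_gbps(width, height, refresh_hz) <= LINKS_GBPS[link]

for link in LINKS_GBPS:
    for hz in (60, 120):
        verdict = "fits" if fits(link, 3840, 2160, hz) else "too much"
        print(f"{link}, 3840x2160 @ {hz}Hz: {verdict}")
```

At roughly 23.9 Gbps, a 4K/120Hz stream squeezes into DisplayPort 1.4 but is far beyond HDMI 2.0, while 4K/60Hz (about 11.9 Gbps) fits either link.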

Brad Chacos/IDG

Both versions of the Radeon RX Vega 64 also sport the nifty “GPU tachometer” feature that debuted with Fury. A line of LED lights above the power connectors flashes to life in step with the GPU load. The harder you put the pedal to the metal, the more lights flare up.

Brad Chacos/IDG

It’s silly, but I adored it in Fury and I still adore it now. There’s just something satisfying about seeing the GPU tachometer start shining when you boot up a game.


Radeon RX Vega power profiles


All Radeon RX Vega cards also pack a pair of 8-pin power connectors, and for good reason. The air-cooled version of Vega 64 is rated for a whopping 295 watts of total board power, and the liquid-cooled model pushes that all the way to 345W. By contrast, Nvidia’s GTX 1080 has a 180W TDP and only requires a single 8-pin power connector. Vega 56, on the other hand, has a less imposing 210W TDP.
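The pair of 8-pin connectors makes sense once you add up what a card may draw within the PCI Express limits: up to 75W through the x16 slot, 75W per 6-pin connector, and 150W per 8-pin connector. Those per-source limits come from the PCIe specifications rather than from this review; the sketch compares the resulting ceilings with the power ratings quoted above.

```python
# In-spec power ceiling = PCIe x16 slot + auxiliary power connectors.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}

def power_ceiling(connectors: list[str]) -> int:
    """Maximum in-spec board power for a given set of auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)

# Power ratings as quoted in the text (board power or TDP), with each card's connectors.
cards = {
    "RX Vega 56": (210, ["8-pin", "8-pin"]),
    "RX Vega 64 (air)": (295, ["8-pin", "8-pin"]),
    "RX Vega 64 (liquid)": (345, ["8-pin", "8-pin"]),
    "GTX 1080": (180, ["8-pin"]),
}

for name, (rated_w, conns) in cards.items():
    ceiling = power_ceiling(conns)
    print(f"{name}: rated {rated_w}W, in-spec ceiling {ceiling}W, headroom {ceiling - rated_w}W")
```

A single 8-pin connector would cap a card at 225W, below both Vega 64 ratings, which is why those cards need a second one; the liquid-cooled model’s 345W leaves only 30W of headroom under the 375W two-connector ceiling.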

AMD’s liquid-cooled Vega 64 review box explicitly states that the card needs a minimum of a 1,000W power supply, compared to the air-cooled version’s 750W requirement. Hot damn. You’ll be able to get by with a less-powerful PSU if you have a quality 80 Plus-rated one, though.

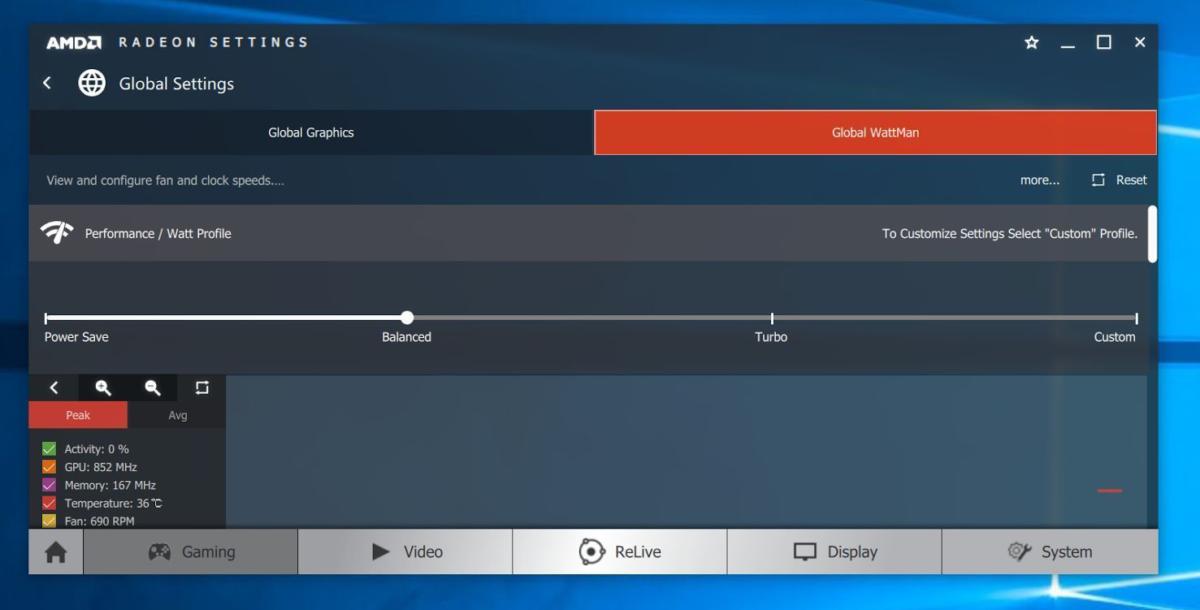

Brad Chacos/IDG

AMD is combating those power concerns by introducing six different power profiles for Radeon RX Vega. If you open the Global Wattman overclocking section of Radeon Software’s settings, you’ll find a new “performance profile” slider. By default, it’s set to a Balanced profile, which balances performance and energy/acoustic considerations. You can also opt to use a Power Save profile, a full-throttle Turbo profile, or create a custom plan. (Don’t forget to click Apply to make your decision stick.)

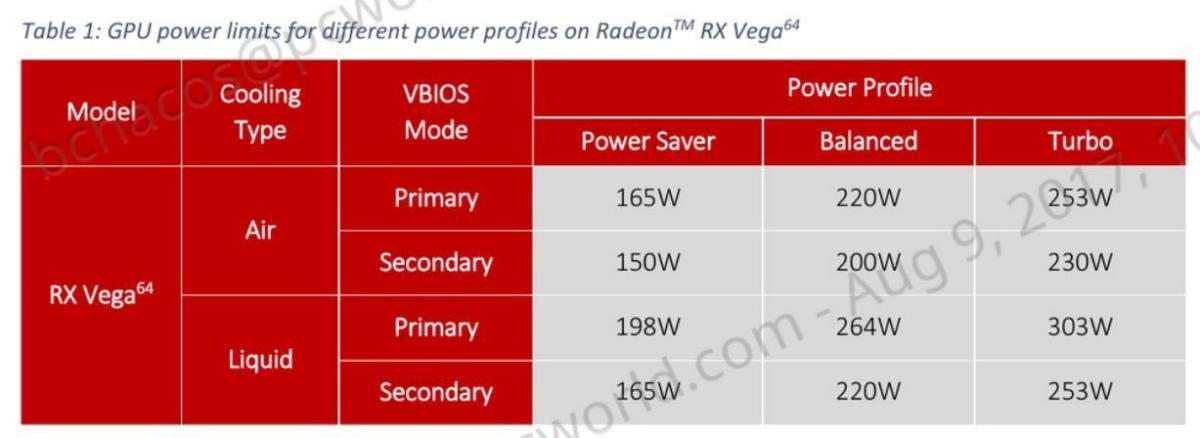

But wait! That’s not all. Radeon RX Vega also includes dual BIOSes, swappable via a tiny toggle switch on the edge of the card, over the “Radeon” branding. The secondary BIOS uses the same performance profiles as the first, but uses even less power—significantly so, in the case of the Turbo profile. Here are the GPU power limits for each profile on the air- and liquid-cooled Vega 64 cards, per AMD:

AMD

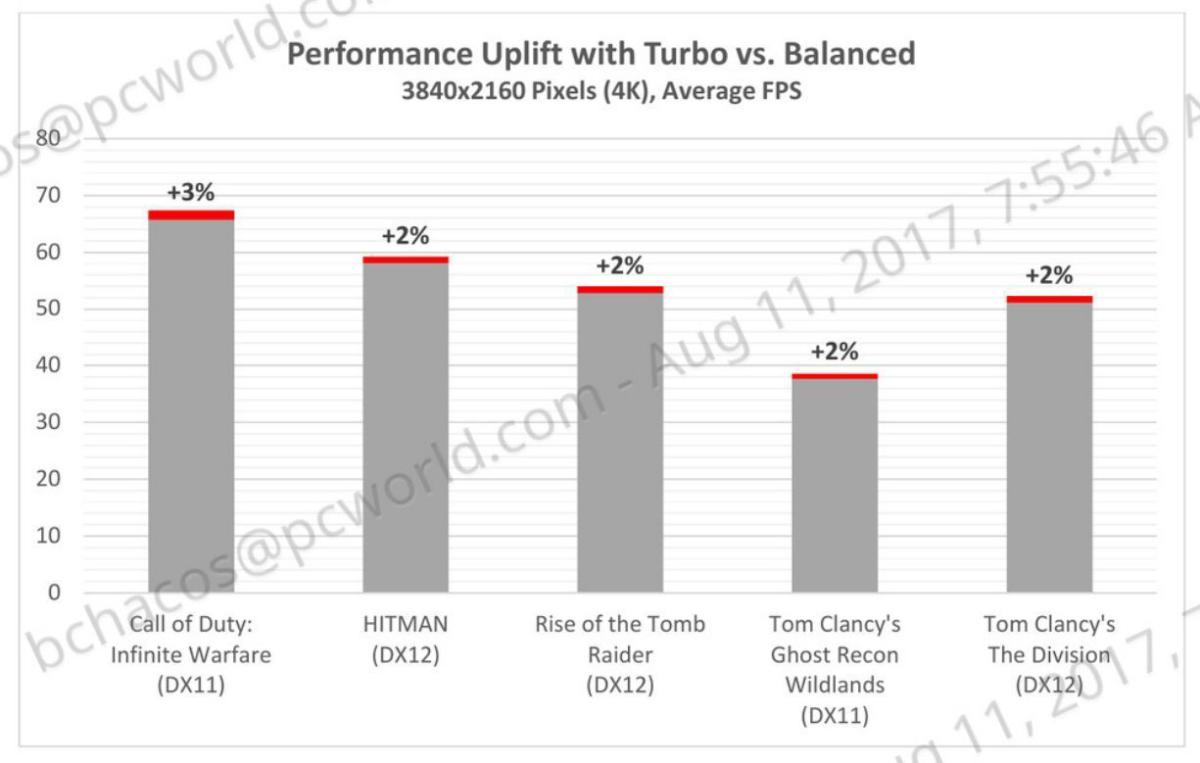

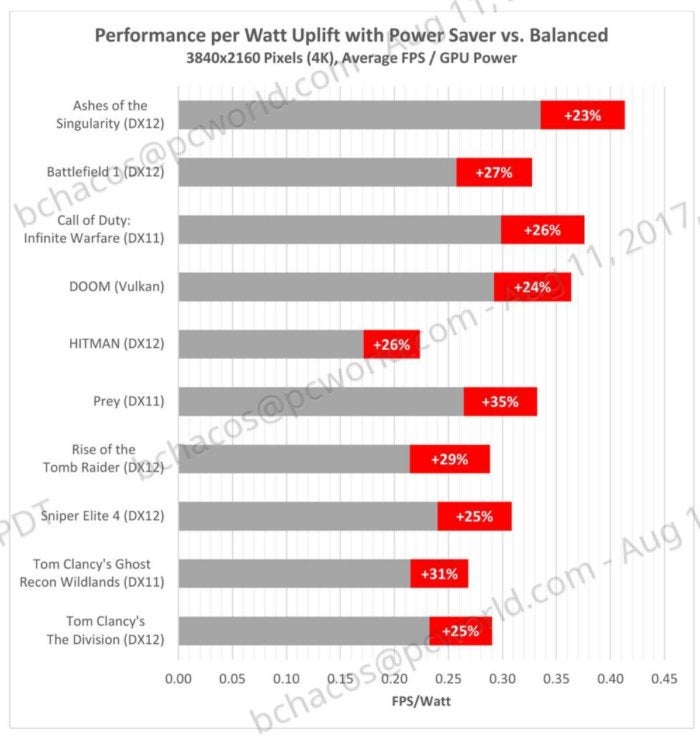

Unfortunately, time constraints prevented us from testing the various power profiles extensively. AMD says activating Turbo mode in the default BIOS adds just 2 to 3 percent more performance across several games, but didn’t say how much power it uses. Conversely, the company says activating the Power Save profile improves performance-per-watt significantly, though its materials don’t directly show the mode’s effect on overall frame rates. Here’s a look at what’s in AMD’s reviewer’s guide (and my email inbox), on a Core i7-7700K system with 16GB of Corsair’s 3,000MHz Vengeance LPX DDR4 memory:

AMD


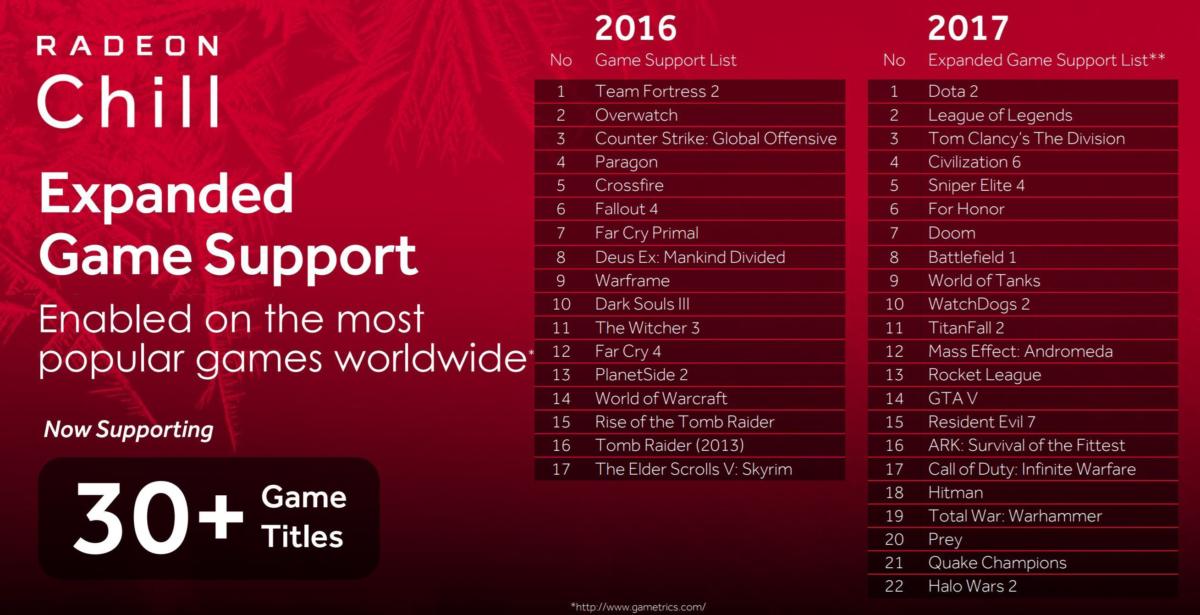

AMD is also (rightfully) keen to point out the power-saving features baked into its Radeon Software. The Radeon Chill feature introduced in Radeon Software Crimson ReLive can greatly reduce overall power use by detecting your inputs and intelligently ramping down the GPU when you’re idle. Unfortunately, it’s off by default and limited to whitelisted games, but that list is up to almost 40 of the most popular games around, like Witcher 3, Fallout 4, Battlefield 1, Skyrim, GTA V, Rocket League, and all the major e-sports titles. If you play any of those games, be sure to enable Chill for them in Radeon Settings.
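Radeon Chill’s exact heuristics aren’t documented here, but the behavior described above (ramp the GPU down when you’re not giving it input) can be sketched as a frame-rate target that jumps to a maximum when you act and glides toward a minimum while you sit still. The function name, the 40 to 144fps range, and the linear one-second decay are hypothetical choices for illustration, not AMD’s implementation.

```python
# Hypothetical Chill-style frame-rate governor, NOT AMD's actual algorithm.
# The target is max_fps right after user input and falls linearly to
# min_fps over decay_seconds of inactivity.
def chill_target_fps(seconds_since_input: float,
                     min_fps: float = 40.0,
                     max_fps: float = 144.0,
                     decay_seconds: float = 1.0) -> float:
    if seconds_since_input <= 0:
        return max_fps
    # Fraction of the decay window that has elapsed, clamped to 1.0.
    t = min(seconds_since_input / decay_seconds, 1.0)
    return max_fps - t * (max_fps - min_fps)

for idle in (0.0, 0.25, 0.5, 1.0, 5.0):
    print(f"{idle:>4}s idle -> target {chill_target_fps(idle):.0f} fps")
```

Every frame the GPU skips while nothing on screen needs to change quickly is power it doesn’t spend, which is where this kind of scheme finds its savings.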

AMD

Radeon Software also includes a Frame Rate Target Control (FRTC) feature that lets you cap your frame rate manually to save even more power and reduce noise output. If you have a 60Hz monitor, for example, you could set FRTC to 60fps and prevent your GPU from pumping out frames that would go unseen. You might not want to do that in twitch-based games where keeping latency to a minimum takes priority, though. You can enable FRTC in the Global Settings section of Radeon Software’s Gaming tab.
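Conceptually, a frame-rate cap gives each frame a fixed time budget (1 second ÷ 60 ≈ 16.7ms at a 60fps target) and idles for whatever is left after the frame finishes. The sketch below shows that idea with Python’s standard clock; FRTC itself lives in AMD’s driver and this is not its code, and the `render_frame` callback is a hypothetical stand-in for a game’s per-frame work.

```python
import time

def run_capped(render_frame, target_fps: float, frames: int) -> float:
    """Render `frames` frames, sleeping so each takes at least 1/target_fps seconds.

    Returns the measured average frame rate.
    """
    budget = 1.0 / target_fps          # seconds allotted to each frame
    start = time.perf_counter()
    next_deadline = start
    for _ in range(frames):
        render_frame()
        next_deadline += budget
        remaining = next_deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)      # idle instead of rendering frames nobody sees
    elapsed = time.perf_counter() - start
    return frames / elapsed

# A "game" that could render far faster than 60fps still averages about 60fps.
avg = run_capped(lambda: None, target_fps=60, frames=30)
print(f"average: {avg:.1f} fps")
```

Scheduling against absolute deadlines rather than sleeping a fixed amount after each frame keeps small timing errors from accumulating over a long session.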

But enough about the basics. Let’s dig into Vega’s most noteworthy new technical features.


Radeon RX Vega: New tech features

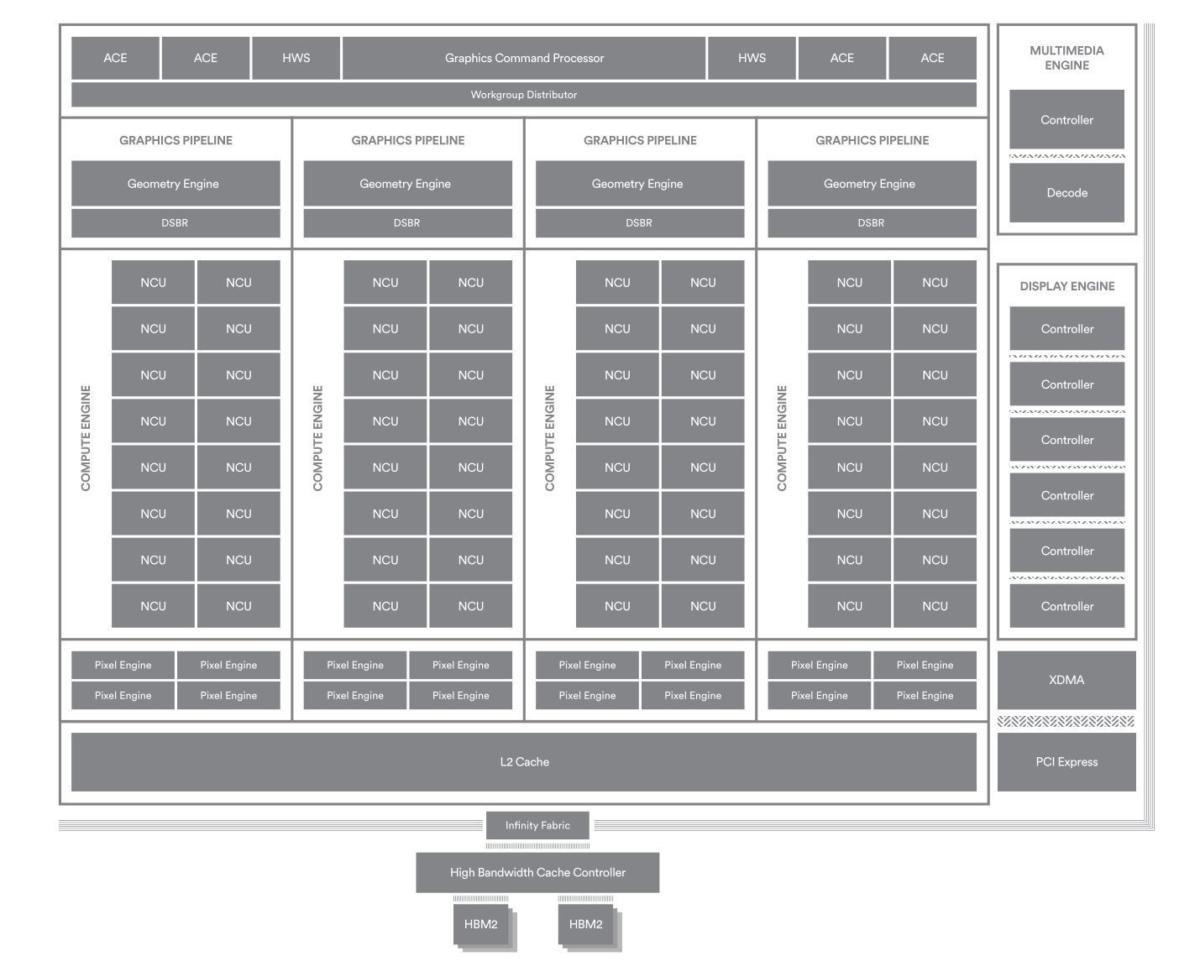

AMD

A block diagram of RX Vega’s GPU design.

Few of Radeon RX Vega’s new underlying features come as a surprise, as AMD already pulled back the curtain on the key details during the Vega technical preview at CES in January. We’ll cover the highlights that will most likely make a difference to everyday gamers here, but hit that link for more details, or check out this white paper on Vega’s architecture.

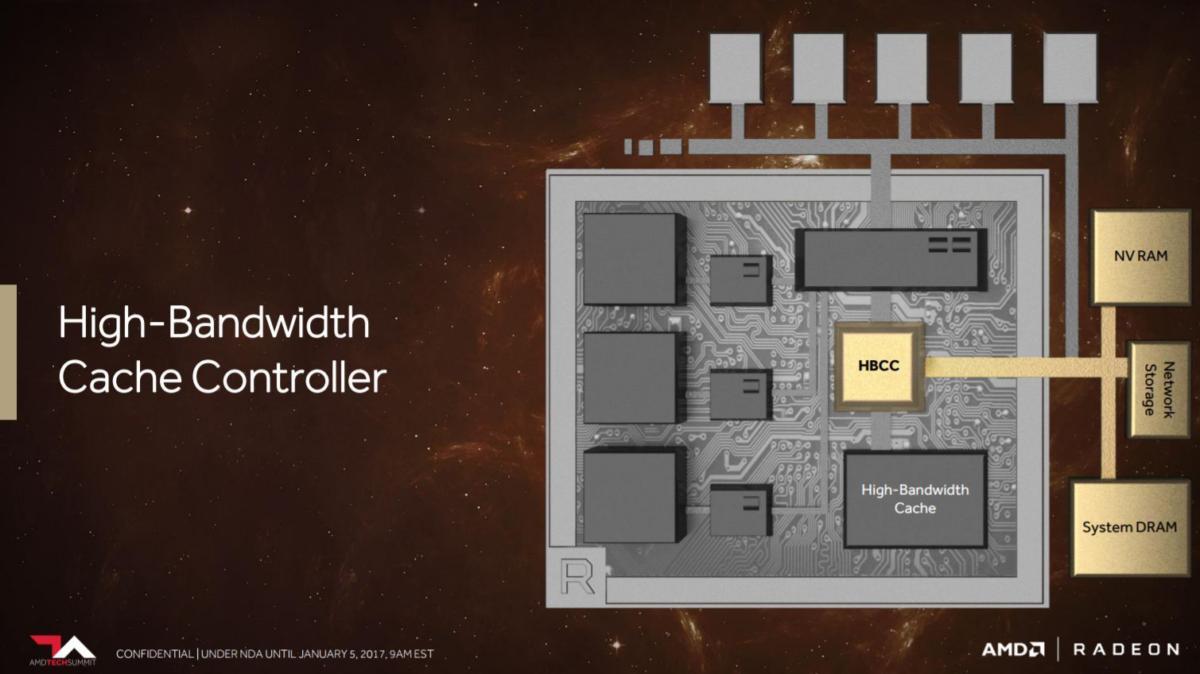

Most interesting might be Vega’s revolutionary new high-bandwidth cache and high-bandwidth cache controller, which creates what Radeon boss Raja Koduri calls “the world’s most scalable GPU memory architecture.”

AMD

As we wrote in the technical preview, the high-bandwidth cache replaces the graphics card’s traditional frame buffer, while the cache controller provides fine-grained control over data and supports a whopping 512 terabytes (not gigabytes, terabytes) of virtual address space. Vega’s HBM design can expand graphics memory beyond onboard RAM to a more heterogeneous memory system capable of managing several memory sources at once.
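For a sense of scale, 512 terabytes of virtual address space works out to 49-bit addresses, assuming binary terabytes (2^40 bytes each), and 65,536 times Vega’s 8GB of onboard HBM2:

```python
import math

TIB = 2**40                      # one binary terabyte (tebibyte), in bytes
address_space = 512 * TIB        # HBCC's quoted virtual address space
address_bits = math.log2(address_space)

onboard_hbm2 = 8 * 2**30         # Vega's 8GB of local HBM2, in bytes
print(f"{address_bits:.0f}-bit addresses")                       # 49-bit addresses
print(f"{address_space // onboard_hbm2:,}x the onboard memory")  # 65,536x
```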

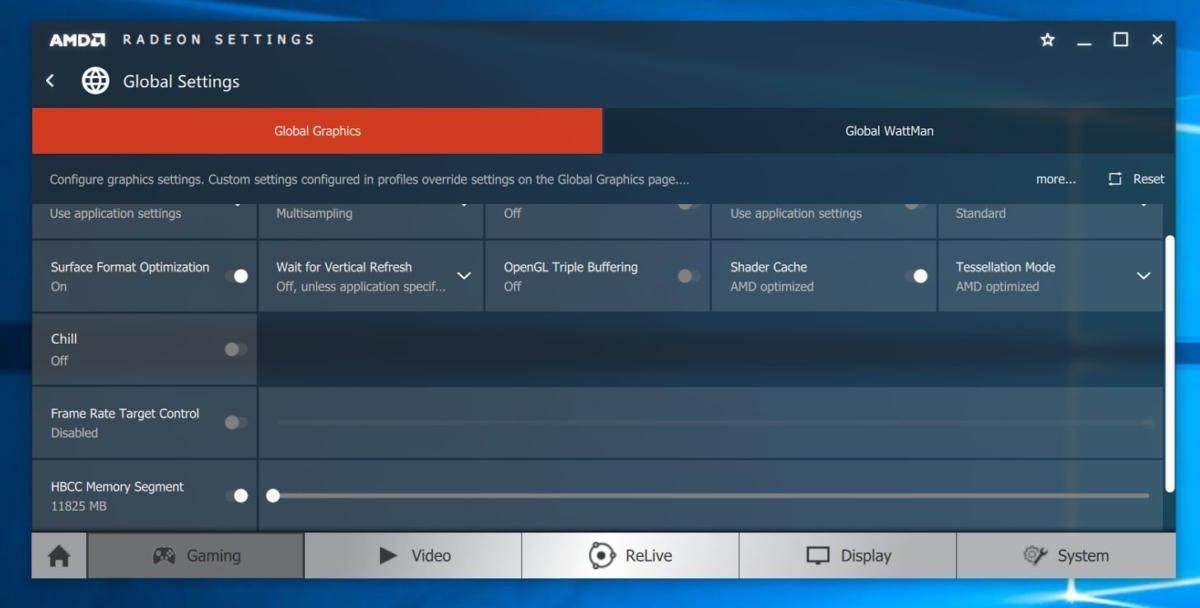

That sounds like a feature best suited to data center workloads—and AMD is indeed rolling out Radeon Instinct cards for machine learning based around the Vega architecture—but a new Radeon Settings feature makes it useful for gamers, too. If you open Radeon Software’s Gaming tab and head to the Global Settings, you’ll see a new “HBCC Memory Segment” section.

Brad Chacos/IDG

See the “HBCC Memory Segment” slider at the bottom?

That slider lets you allocate a portion of your system’s main RAM to gaming, combining with RX Vega’s 8GB of onboard HBM2 to create a larger memory pool. “The high-bandwidth cache controller will monitor the utilization of bits in local GPU memory and, if needed, move unused bits to the slower system memory space, effectively increasing the size of the GPU’s local memory,” AMD explains.
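AMD’s description amounts to treating local HBM2 as a cache over a larger pool that also spans system RAM: data in active use stays in fast local memory, and cold pages get pushed out to system memory when space runs short. The toy model below uses a least-recently-used (LRU) policy over fixed-size pages. The class name, page counts, and the choice of LRU are assumptions for illustration; the review doesn’t say which replacement policy the HBCC actually uses.

```python
from collections import OrderedDict

class TwoTierMemory:
    """Toy model: a small fast tier (HBM2) backed by a larger slow tier (system RAM).

    Pages are promoted into the fast tier on access; when it is full, the
    least recently used page is demoted to the slow tier. Hypothetical,
    not AMD's HBCC logic.
    """

    def __init__(self, fast_capacity_pages: int):
        self.fast_capacity = fast_capacity_pages
        self.fast = OrderedDict()   # page id -> None, ordered oldest -> newest use
        self.slow = set()           # pages currently parked in system memory
        self.migrations = 0         # page moves between the two tiers

    def access(self, page: int) -> None:
        if page in self.fast:
            self.fast.move_to_end(page)          # mark as most recently used
            return
        if page in self.slow:
            self.slow.remove(page)
            self.migrations += 1                 # slow -> fast promotion
        if len(self.fast) >= self.fast_capacity:
            victim, _ = self.fast.popitem(last=False)  # evict least recently used
            self.slow.add(victim)
            self.migrations += 1                 # fast -> slow demotion
        self.fast[page] = None

mem = TwoTierMemory(fast_capacity_pages=3)
for p in [1, 2, 3, 1, 4, 2]:
    mem.access(p)
print(sorted(mem.fast), sorted(mem.slow), mem.migrations)  # [1, 2, 4] [3] 3
```

In this trace, page 2 gets evicted to make room for page 4 and then pulled back in, which is exactly the kind of round trip that costs performance when a game’s working set outgrows local memory.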

That sounds awfully intriguing, and it could theoretically prevent stuttering and slowdowns in extremely taxing games. Unfortunately, time constraints prevented us from testing the feature, and to be honest, finding a game that needs more than Vega’s native 8GB of HBM2 might prove difficult. Color me excited, though, especially because AMD says the HBCC can also improve overall memory utilization in games, raising minimum frame rates.

Vega also includes revamped “next-gen compute units” that can perform two 16-bit operations (aka FP16) simultaneously, which isn’t possible in previous AMD GPUs. AMD calls the feature “rapid packed math,” as you can see in the video above. Rapid packed math won’t work with all aspects of a game, but functions that can utilize it (like some lighting, procedural, and post-processing effects) can essentially run twice as fast, as demonstrated in a February Vega demo where enabling RPM allowed a system to render 1,200,000 hair strands per second, as opposed to 550,000 with RPM disabled.
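Rapid packed math works because two 16-bit floats fit in the same 32-bit register that normally holds a single 32-bit float, so one instruction can operate on both halves at once; that’s also why peak FP16 throughput is double the FP32 figure. The sketch below uses Python’s `struct` module (whose `e` format is IEEE half precision) to pack two FP16 values into one 32-bit word and emulate a packed add lane by lane. It illustrates the data layout only, not how Vega’s hardware executes the instruction.

```python
import struct

def pack_half2(a: float, b: float) -> int:
    """Pack two FP16 values into one 32-bit word (a in the low 16 bits)."""
    return struct.unpack("<I", struct.pack("<ee", a, b))[0]

def unpack_half2(word: int) -> tuple[float, float]:
    """Split a 32-bit word back into its two FP16 lanes."""
    return struct.unpack("<ee", struct.pack("<I", word))

def packed_add(x: int, y: int) -> int:
    """Emulate a packed FP16 add: one 'instruction', two independent results."""
    x0, x1 = unpack_half2(x)
    y0, y1 = unpack_half2(y)
    return pack_half2(x0 + y0, x1 + y1)

result = unpack_half2(packed_add(pack_half2(1.5, 2.25), pack_half2(0.5, 4.0)))
print(result)  # (2.0, 6.25)
```

The catch is precision: FP16 carries only about three significant decimal digits, which is why developers have to pick which effects can tolerate it.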


Far Cry 5 will support rapid packed math. In the video above, Steve McAuley, the game’s 3D technical lead, says the game will run faster, at a higher frame rate, and at a more stable frame rate as well. Wolfenstein II: The New Colossus will also support RPM in some way.

AMD

Vega has a couple of other features designed to improve performance by working smarter, not harder. Rendering a scene is a complex process. A new programmable geometry pipeline can use primitive shaders to identify polygons that aren’t visible to the player and cull them quickly, allowing the GPU to get to the geometry you can actually see sooner. Yay, efficiency!
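How primitive shaders work internally isn’t described here, but two classic tests for throwing away polygons the player can’t see are simple: a triangle whose screen-space vertices wind clockwise (when counter-clockwise is the front-facing convention) faces away from the camera, and a triangle with zero area covers no pixels. The sketch applies both tests using the signed area from a 2D cross product. The counter-clockwise convention and the function names are assumptions, and real GPUs also discard primitives that land off-screen or between pixel samples.

```python
Point = tuple[float, float]  # screen-space (x, y), with y pointing up

def signed_area(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc; positive if counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])

def is_culled(tri: tuple[Point, Point, Point]) -> bool:
    """Cull back-facing (clockwise) and degenerate (zero-area) triangles."""
    return signed_area(*tri) <= 0.0

triangles = [
    ((0, 0), (4, 0), (0, 4)),   # counter-clockwise: front-facing, kept
    ((0, 0), (0, 4), (4, 0)),   # clockwise: back-facing, culled
    ((0, 0), (2, 2), (4, 4)),   # collinear: zero area, culled
]
visible = [t for t in triangles if not is_culled(t)]
print(f"{len(visible)} of {len(triangles)} triangles survive culling")
```

Each test costs a couple of multiplies per triangle, far less than pushing a doomed triangle through rasterization and shading.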

Vega’s pixel engine introduces a “draw stream binning rasterizer” that also improves efficiency and, hence, performance. After the geometry engine performs its (already reduced amount of) work, Vega identifies overlapping pixels that won’t be seen by the user and thus don’t need to be rendered. The GPU then discards those pixels rather than wasting time rendering them. DSBR should also reduce the load on RX Vega’s memory. It’s similar to the tile-based rendering that helped give Nvidia’s GeForce graphics cards a tremendous boost in efficiency starting with the Maxwell architecture, though it remains to be seen whether AMD’s solution is as effective.
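The binning idea can be illustrated with a simplified model: sort primitives into screen tiles first, then, inside each tile, work out which primitive is nearest at every pixel before shading anything, so pixels that end up covered are never shaded. To keep it short, the sketch treats each primitive as an opaque, axis-aligned rectangle at a constant depth; the tile size, data layout, and names are hypothetical, and AMD’s DSBR is considerably more involved.

```python
from collections import defaultdict

TILE = 4  # tile edge in pixels (hypothetical)

# Each primitive: (x0, y0, x1, y1, depth), covering x0 <= x < x1 and y0 <= y < y1.
# Smaller depth = closer to the camera. All primitives are opaque.
prims = [
    (0, 0, 8, 8, 0.9),   # large background quad
    (0, 0, 8, 8, 0.5),   # same area but closer: hides the background entirely
    (2, 2, 6, 6, 0.7),   # behind the closer quad: fully hidden
]

def bin_primitives(prims):
    """Map each tile coordinate to the indices of primitives that overlap it."""
    bins = defaultdict(list)
    for i, (x0, y0, x1, y1, _) in enumerate(prims):
        for ty in range(y0 // TILE, (y1 - 1) // TILE + 1):
            for tx in range(x0 // TILE, (x1 - 1) // TILE + 1):
                bins[(tx, ty)].append(i)
    return bins

def shade_binned(prims):
    """Resolve visibility per tile first, then shade each covered pixel once."""
    shaded = 0
    for (tx, ty), ids in bin_primitives(prims).items():
        nearest = {}  # pixel -> closest depth seen in this tile
        for i in ids:
            x0, y0, x1, y1, depth = prims[i]
            for y in range(max(y0, ty * TILE), min(y1, (ty + 1) * TILE)):
                for x in range(max(x0, tx * TILE), min(x1, (tx + 1) * TILE)):
                    if depth < nearest.get((x, y), float("inf")):
                        nearest[(x, y)] = depth
        shaded += len(nearest)  # one shading pass per visible pixel
    return shaded

# Worst case without any early depth rejection: every fragment gets shaded.
naive = sum((x1 - x0) * (y1 - y0) for x0, y0, x1, y1, _ in prims)
print(f"shade every fragment: {naive}, binned with hidden-pixel discard: {shade_binned(prims)}")
```

Here 144 fragments collapse to 64 shaded pixels, one per pixel on the 8×8 screen; working tile by tile also keeps each tile’s data small enough to stay on-chip, which is where the memory savings come from.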

And get this: Vega fully supports Microsoft’s DirectX 12 feature level 12_1.


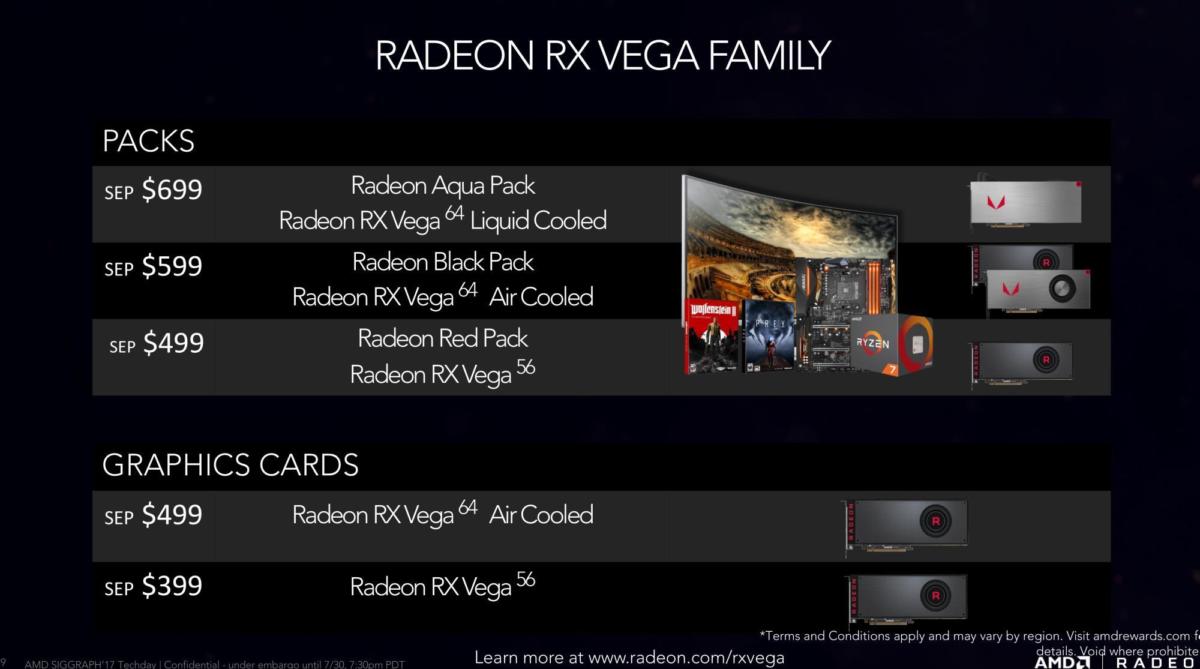

RX Vega Radeon Pack bundles

Before we dive into performance testing, it’s worth noting the unconventional way Radeon RX Vega is being sold. Sure, you can buy standalone versions of the air-cooled Radeon RX Vega 56 and RX Vega 64 at their respective $399 and $499 price points, but AMD’s also selling the graphics cards at a $100 markup with “Radeon Packs” that offer bundled games and another $300 in discounts on Ryzen and FreeSync hardware. The liquid-cooled RX Vega 64 is only available as part of the $699 Radeon Aqua Pack. You can’t buy it standalone.

AMD

It sounds simple enough, but the situation’s actually somewhat complex. (You don’t actually have to buy the extra hardware if you pick up a Radeon Pack edition of a Vega card, for example.) Head over to PCWorld’s RX Vega Radeon Pack explainer for a full breakdown. One crucial tidbit: It remains to be seen how much Vega stock gets allocated to standalone versions and how much gets set aside for Radeon Packs. Here’s what AMD says about the situation:

“We can’t break out volumes, but we’re working to ensure ample quantities of both standalone cards and the Radeon Packs so that gamers can get exactly what they’re looking for.”

Update: Very, very few standalone cards seemed to be available for Vega 64’s launch, with the vast majority of stock allocated for Radeon Packs. Standalone cards disappeared quickly and pricing for all models leaped up by $100 or more at retail mere hours after release. Radeon RX Vega 56 cards disappeared instantaneously on August 28 as well.

Enough talk. Let’s benchmark!

Our test system

We tested the Radeon RX Vega cards on PCWorld’s dedicated graphics card benchmark system. Our testbed’s loaded with high-end components to avoid bottlenecks in other parts of the system and show unfettered graphics performance. Some secondary details differ from prior reviews, however, as we’re in the process of upgrading to a more modern testing system. The case, power supply, and SSD model have changed, but the core aspects remain the same as before, and we retested all of the cards.

- Intel’s Core i7-5960X with a Corsair Hydro Series H100i closed-loop water cooler ($110 on Amazon)
- An Asus X99 Deluxe motherboard
- 16GB of Corsair’s Vengeance LPX DDR4 memory ($148 on Amazon)
- EVGA Supernova 1000 G3 power supply ($200 on Amazon)
- A 500GB Samsung 850 EVO SSD ($175 on Amazon)
- Corsair Crystal Series 570X case, deemed Full Nerd’s favorite case of 2016 ($180 on Amazon)
- Windows 10 Pro ($180 on Amazon)


I’d hoped to perform more extensive testing on Radeon Vega, going so far as to prepare a Ryzen 1800X system ahead of time to provide benchmarks on both AMD- and Intel-based systems. I’d also hoped to test the cards’ hash rates for mining. Unfortunately, some hardware failures in our main test rig and an extremely tight testing window (AMD shipped the Vega cards to us painfully close to the deadline) prevented me from doing more than standard games testing. A version of Wattman that enabled Vega overclocking only arrived over the weekend, too late to test, so I wasn’t able to dabble in that, either.

The limited testing time and hardware failures also affected our lineup of tested graphics cards. We’re reviewing the $399 Radeon RX Vega 56, $499 air-cooled RX Vega 64, and $699 liquid-cooled RX Vega 64, of course. All were benchmarked using the Balanced power profile on the stock BIOS. We weren’t able to retest the Fury X, Vega’s HBM-packing predecessor. The card was a bit slower than the GTX 1070 when Nvidia’s card launched in May 2016.

Brad Chacos/IDG

We also retested the cards’ natural competitors, the (theoretically) $350 Nvidia GeForce GTX 1070 and $500 GTX 1080. We’re also including the reference $700 GTX 1080 Ti, with performance results from April drivers. Our Founders Edition card suffered an early death shortly thereafter, so we weren’t able to retest it with Nvidia’s latest drivers but wanted to include the numbers as a reference point.


Because we’ve added a couple of new games to our suite for this review, we’re also including results from the $735 PNY GTX 1080 Ti XLR8, a GTX 1080 Ti variant with a custom cooler, a slight overclock, and a very modest markup over the reference version. We try not to mix reference and custom models in reviews, but it felt warranted in this case—especially considering the liquid-cooled Vega 64’s price tag.

Each game is tested using its in-game benchmark at the mentioned graphics presets, after disabling VSync, frame rate caps, and all GPU vendor-specific technologies—like AMD TressFX, Nvidia GameWorks options, and FreeSync/G-Sync. Given the capabilities of these cards, we tested the Vega 64, GTX 1080, and GTX 1080 Ti at 1440p and 4K resolution. The Vega 56 and GTX 1070 were tested at 1080p, 1440p, and 4K. They’re versatile!
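Resolution matters so much because pixel counts climb quickly: 4K pushes 2.25 times as many pixels as 1440p and four times as many as 1080p, so every frame asks the GPU for proportionally more shading work.

```python
resolutions = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

# Total pixels per frame at each resolution, and the ratio to 1080p.
pixels = {name: w * h for name, (w, h) in resolutions.items()}
for name, count in pixels.items():
    print(f"{name}: {count:,} pixels, {count / pixels['1080p']:.2f}x 1080p")
```

Frame rates don’t fall in exact proportion, since not all of a GPU’s work scales with pixel count, but these ratios explain why a card can be comfortable at 1440p and struggle at 4K.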


Radeon RX Vega: Benchmarks galore

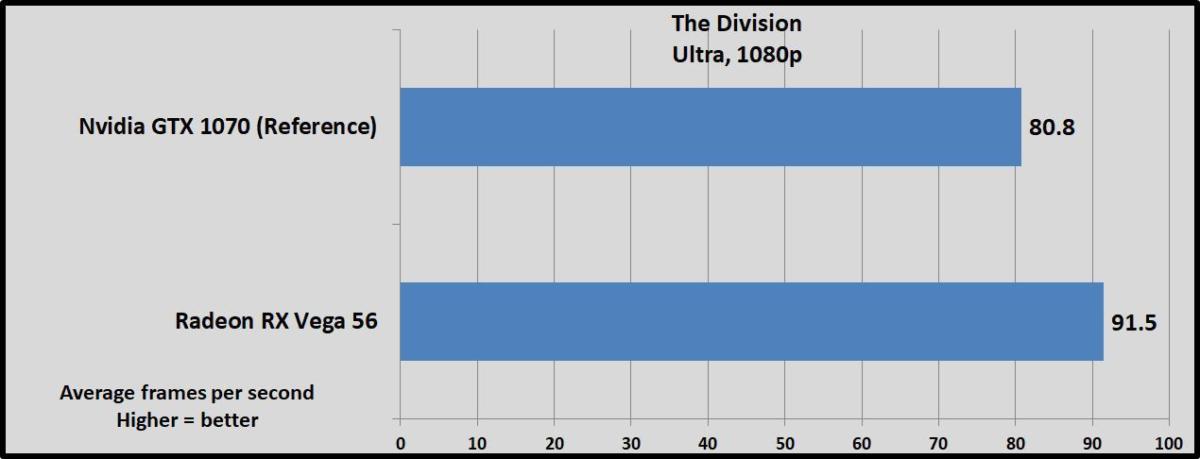

The Division

The Division, a gorgeous third-person shooter/RPG that mixes elements of Destiny and Gears of War, kicks things off with Ubisoft’s Snowdrop engine. We test the game in DirectX 11 mode.

Brad Chacos/IDG


Vega 56 trounces the GTX 1070 Founders Edition by roughly 13 percent across all resolutions. Chalk that up as a solid win for AMD. The air-cooled Vega 64 and GTX 1080 Founders Edition essentially trade blows, with the liquid-cooled Vega getting a decent 7 to 9 percent uplift from its cooler temperatures and higher clock speeds. The GTX 1080 Ti, priced similarly to the liquid-cooled Vega 64, blew away all comers, though, and (spoiler alert again) will continue to do so throughout testing.

Minimum frame rates aren’t listed in this review. We tend to mention that metric only when it’s an issue, and it wasn’t. All of these cards pump out smooth gameplay in the games we tested.


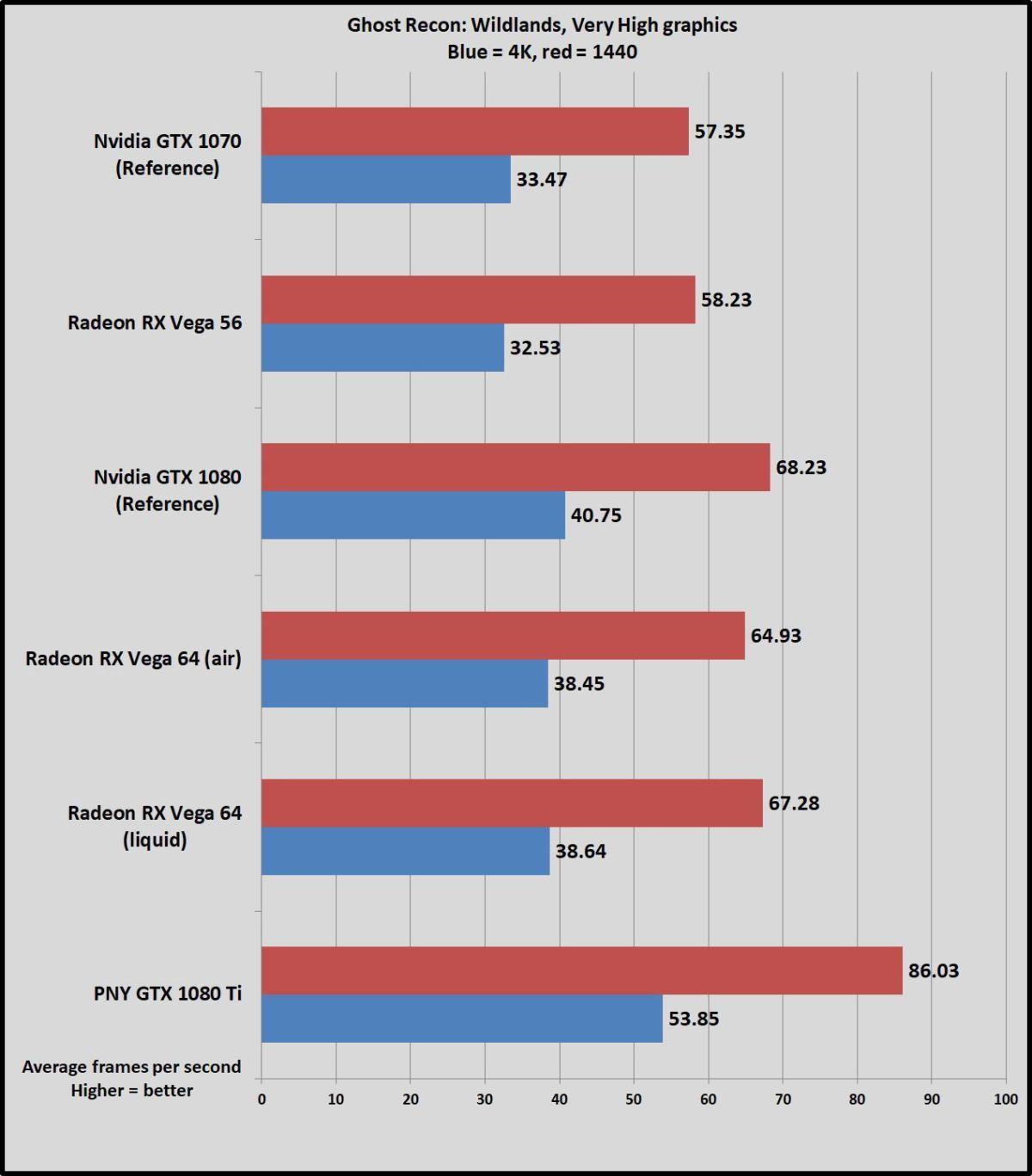

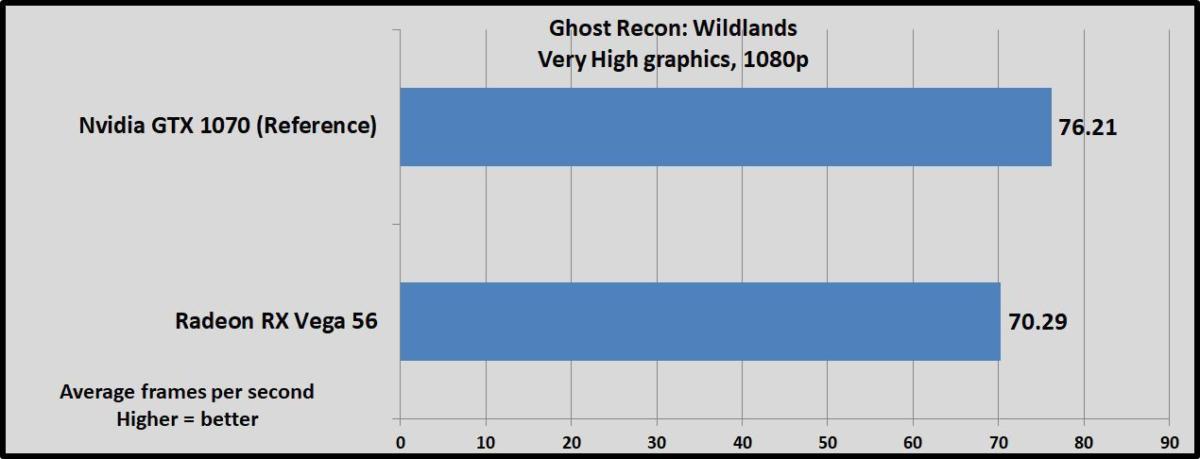

Ghost Recon: Wildlands

Next up: Ghost Recon: Wildlands, a drop-dead gorgeous and notoriously punishing game based on Ubisoft’s Anvil engine. Not even the GTX 1080 Ti put in a decent showing at Ultra graphics settings at 4K, so we dropped down to Very High, which “is targeted to high-end hardware.” It’s a game that includes some Nvidia GameWorks features, but again, we test with those disabled.

Brad Chacos/IDG


Unless you’re running a GTX 1080 Ti or willing to reduce graphics settings below what we tested here (for reference, dropping down to the High preset only added 3fps of performance to the air-cooled Vega 64), this game is much better suited to 1440p than 4K. The Vega 56 draws even with the GTX 1070 at 1440p and 4K in this Nvidia-leaning game, though the GeForce card opens up a bit of a lead at 1080p.

Likewise, the GTX 1080 maintains a 5 percent lead over both Vega 64 models at 4K, which only amounts to a couple of frames per second in practice. It keeps that advantage over the air-cooled Vega 64 at 1440p, though the liquid-cooled version closes the gap.

The PNY GTX 1080 Ti XLR8 still carries the performance torch, with a 32 percent performance advantage over the GTX 1080 at 4K, and 26 percent performance advantage at 1440p. The GTX 1080 Ti Founders Edition would likely be roughly 5 percent slower.


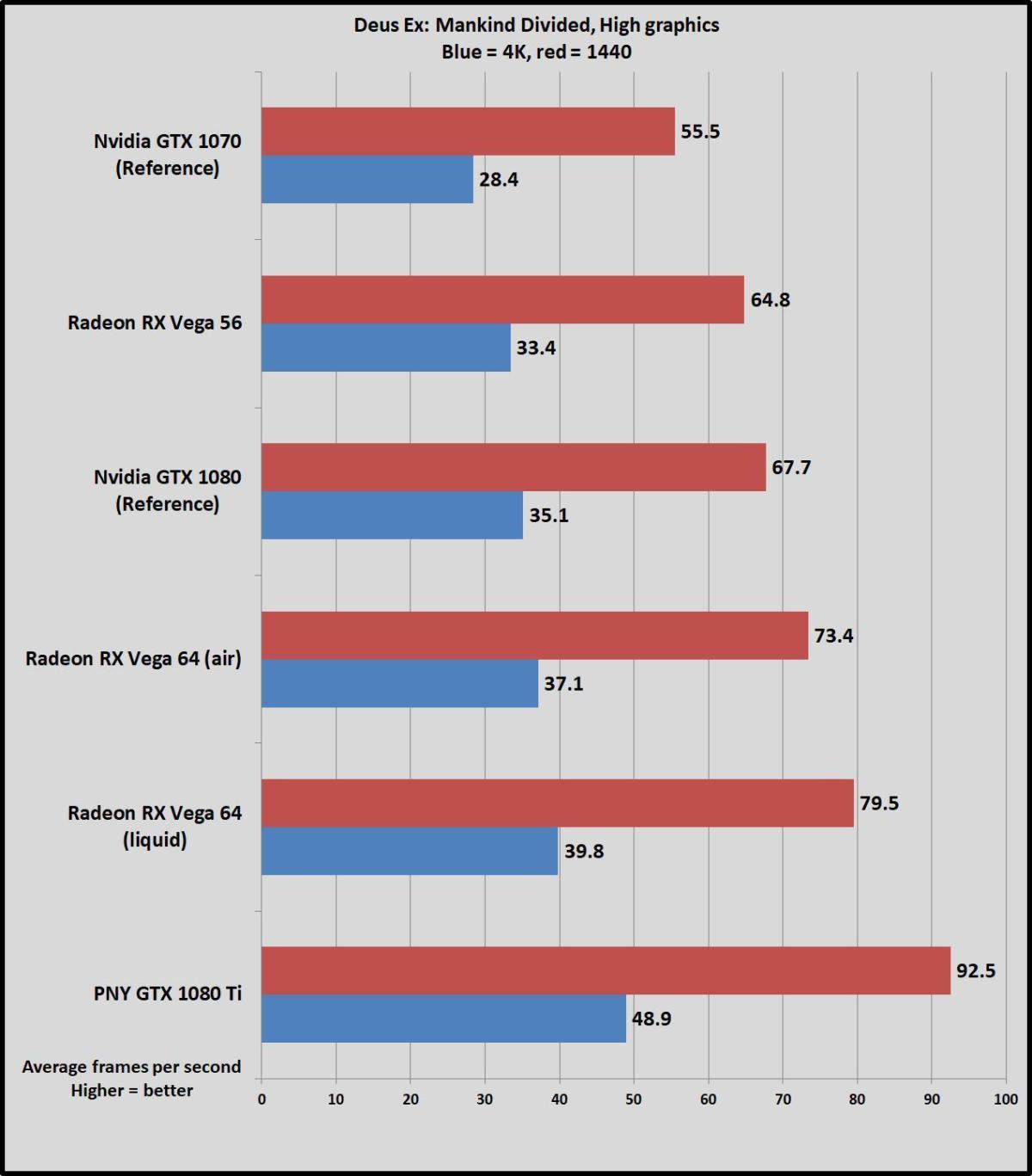

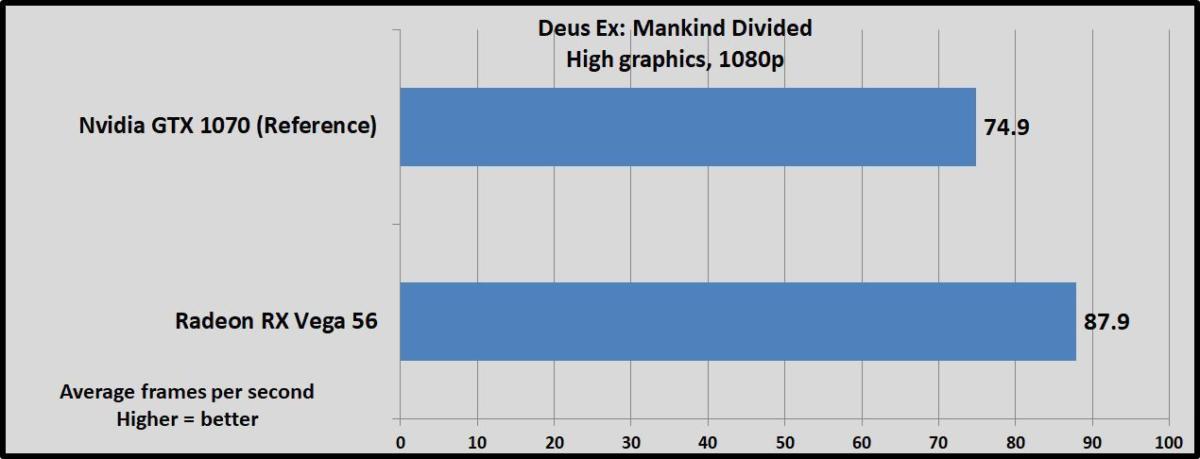

Deus Ex: Mankind Divided

Now it’s time for another graphically punishing game, but this one favors AMD hardware. Deus Ex: Mankind Divided replaces Hitman in our test suite because its Dawn engine is based upon the Glacier Engine at Hitman’s heart. We dropped all the way down to the High graphics preset for this one and still struggled at 4K. We tested in DirectX 12, as that mode raises all performance boats regardless of which brand’s GPU sits at the heart of your graphics card.

Brad Chacos/IDG


No surprise here: AMD wins across the board. Once again, Vega 56 surpasses the GTX 1070 Founders Edition by 12 to 13 percent depending on resolution. Vega 64 especially dominates the GTX 1080 at 1440p resolution, and the liquid-cooled version’s higher clock speeds give it a sizeable 8 percent boost over the air-cooled model. The liquid-cooled version delivers 17.5 percent more performance than the GTX 1080 Founders Edition at that resolution.

Even the overclocked, custom-cooled PNY GTX 1080 Ti XLR8 fails to come anywhere near the 60fps gold standard at 4K. The GTX 1080 Ti Founders Edition would likely be roughly 5 percent slower.


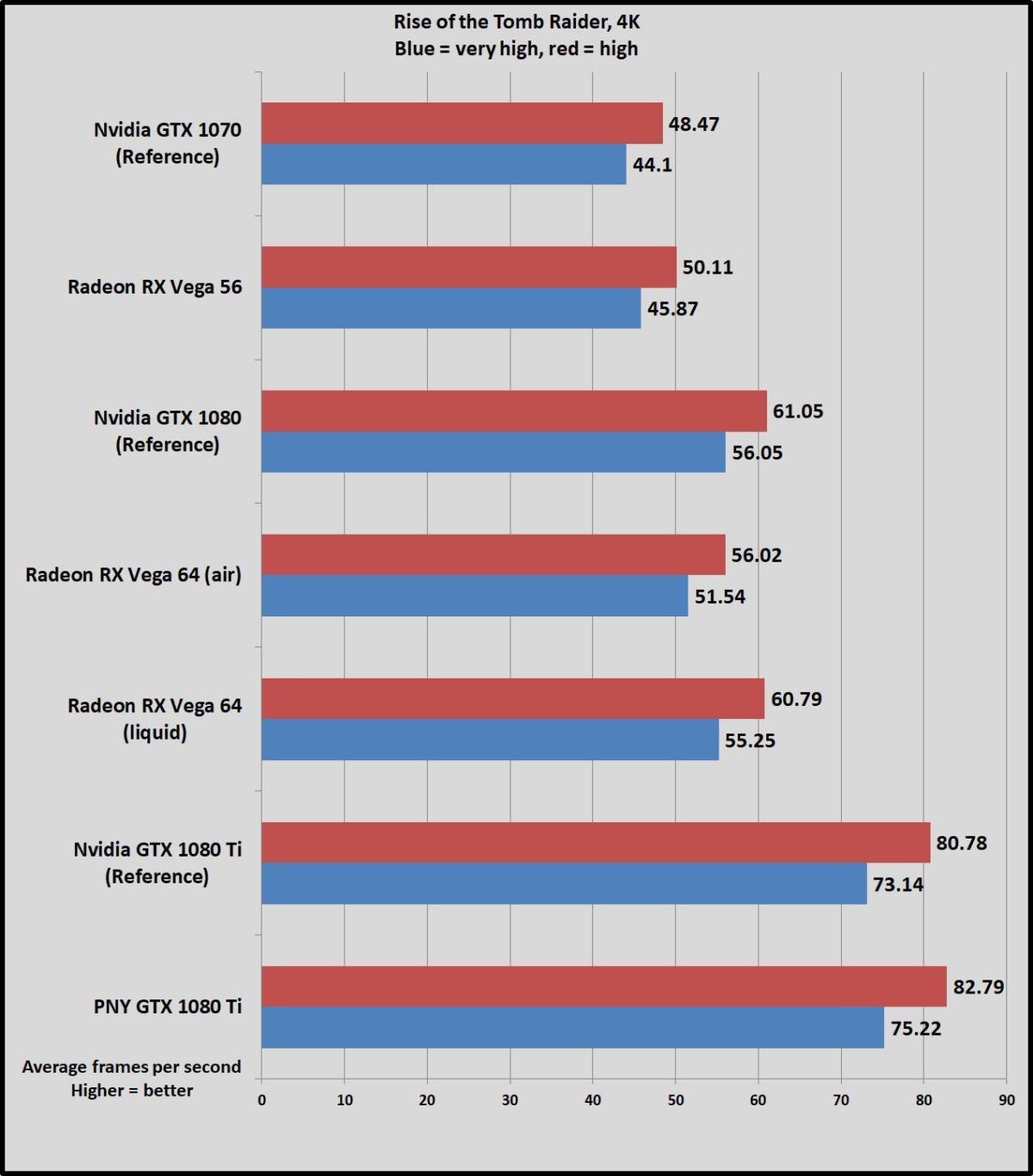

Rise of the Tomb Raider

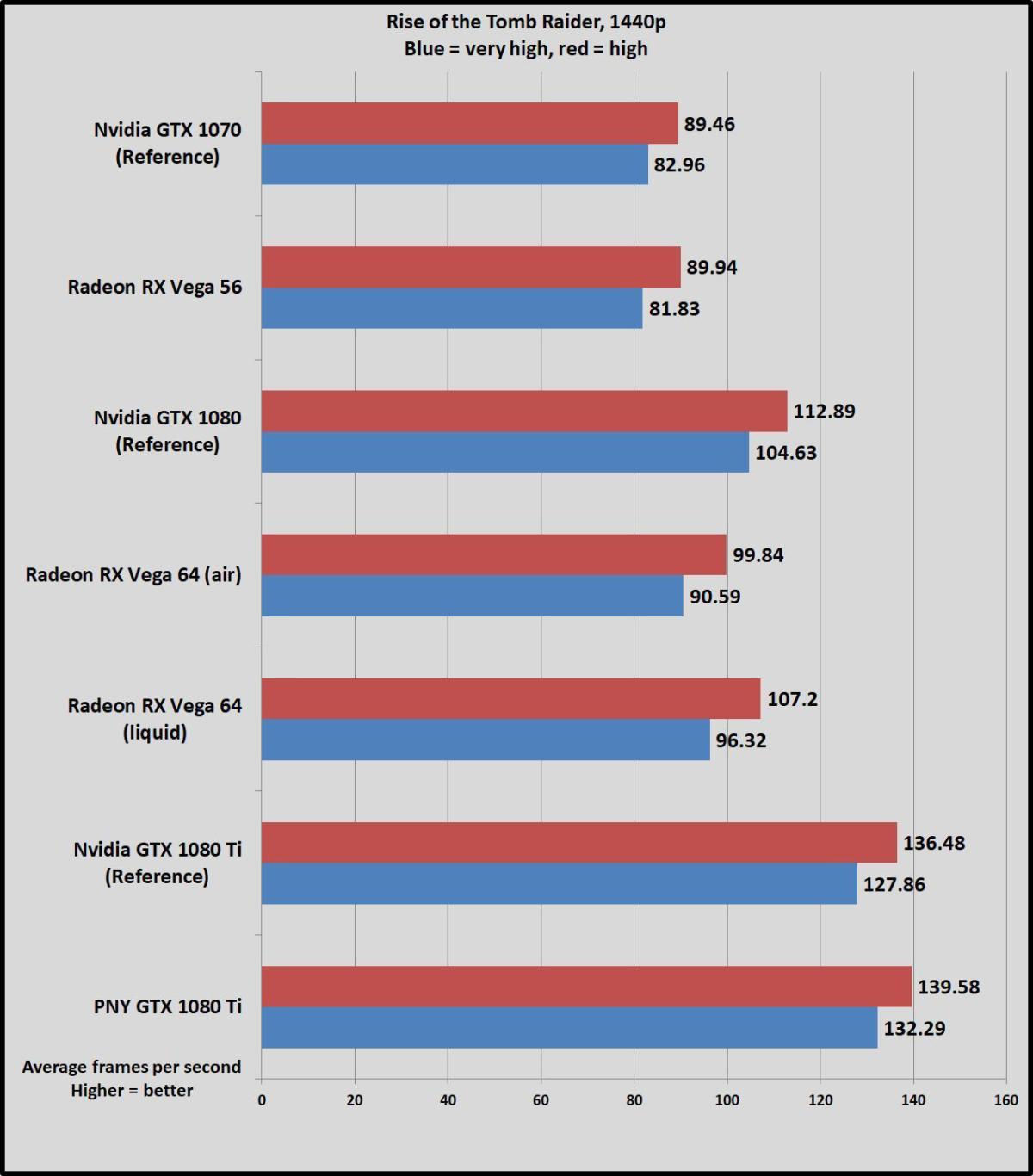

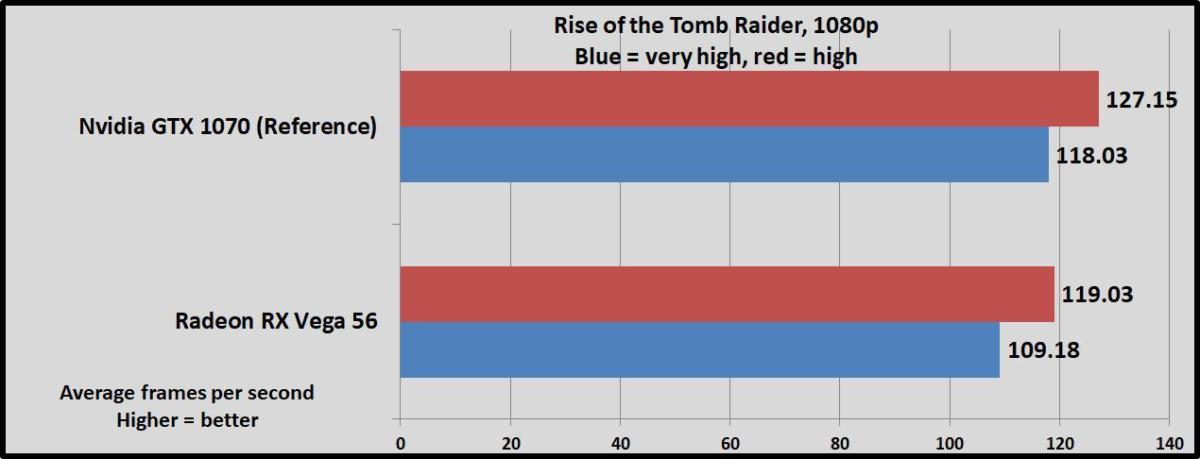

Rise of the Tomb Raider tends to perform better on GeForce cards, on the other hand. It’s utterly gorgeous and one of the first games to receive specific optimizations for AMD’s new Ryzen processors (not that it matters here).

Brad Chacos/IDG


Vega 56 manages to trade blows with the GTX 1070 even in this best-case scenario for GeForce cards, which is impressive indeed. Vega 64 churns out decent frame rates of its own, but isn’t as fast as the GTX 1080 Founders Edition, even with a decent performance boost in liquid-cooled form. The gap is most noticeable with everything cranked at 1440p resolution, where the air-cooled Vega 64 lags the GTX 1080 by 15 percent. Still, Vega 64’s 90fps average is nothing to sneer at.
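Percentage gaps depend on which card serves as the baseline. Starting from the 90fps figure above, “Vega 64 is 15 percent slower than the GTX 1080” and “the GTX 1080 is 15 percent faster than Vega 64” imply different GTX 1080 frame rates. The GTX 1080 numbers below are derived purely to show the arithmetic, not taken from our charts.

```python
def percent_faster(fps: float, baseline_fps: float) -> float:
    """How much faster `fps` is than `baseline_fps`, in percent."""
    return (fps / baseline_fps - 1) * 100

vega64_fps = 90.0

# Reading 1: Vega 64 is 15% slower than the GTX 1080 (GTX 1080 is the baseline).
gtx1080_if_vega_slower = vega64_fps / (1 - 0.15)
# Reading 2: the GTX 1080 is 15% faster than Vega 64 (Vega 64 is the baseline).
gtx1080_if_1080_faster = vega64_fps * (1 + 0.15)

print(f"{gtx1080_if_vega_slower:.1f} fps vs {gtx1080_if_1080_faster:.1f} fps")
print(f"{percent_faster(gtx1080_if_vega_slower, vega64_fps):.1f}% faster under reading 1")
```

Seen from the other side, a 15 percent deficit is the same gap as a roughly 17.6 percent lead.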

The $700 GTX 1080 Ti Founders Edition outpunches the $700 Vega 64 liquid-cooled edition by nearly 33 percent. That is something worth raising an eyebrow over.

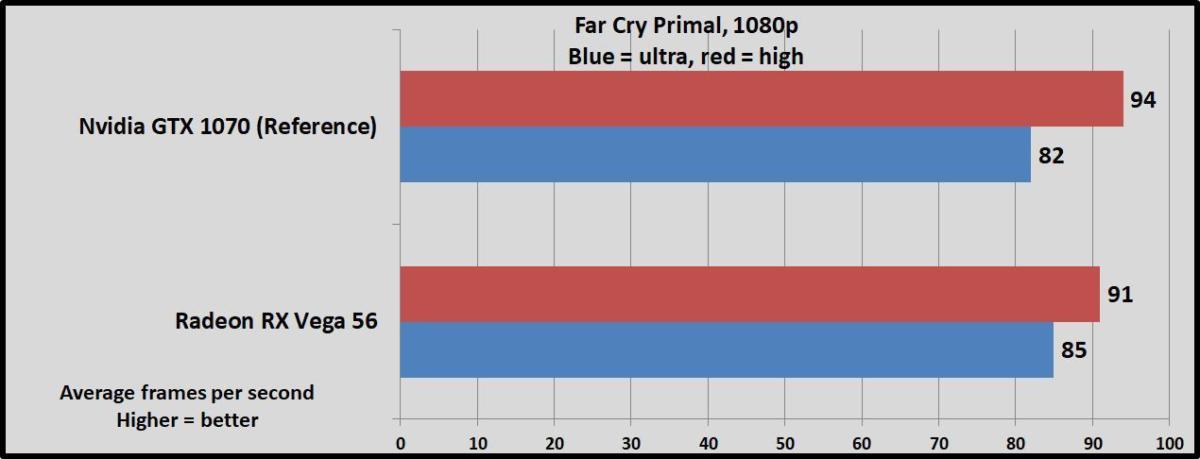


Far Cry Primal

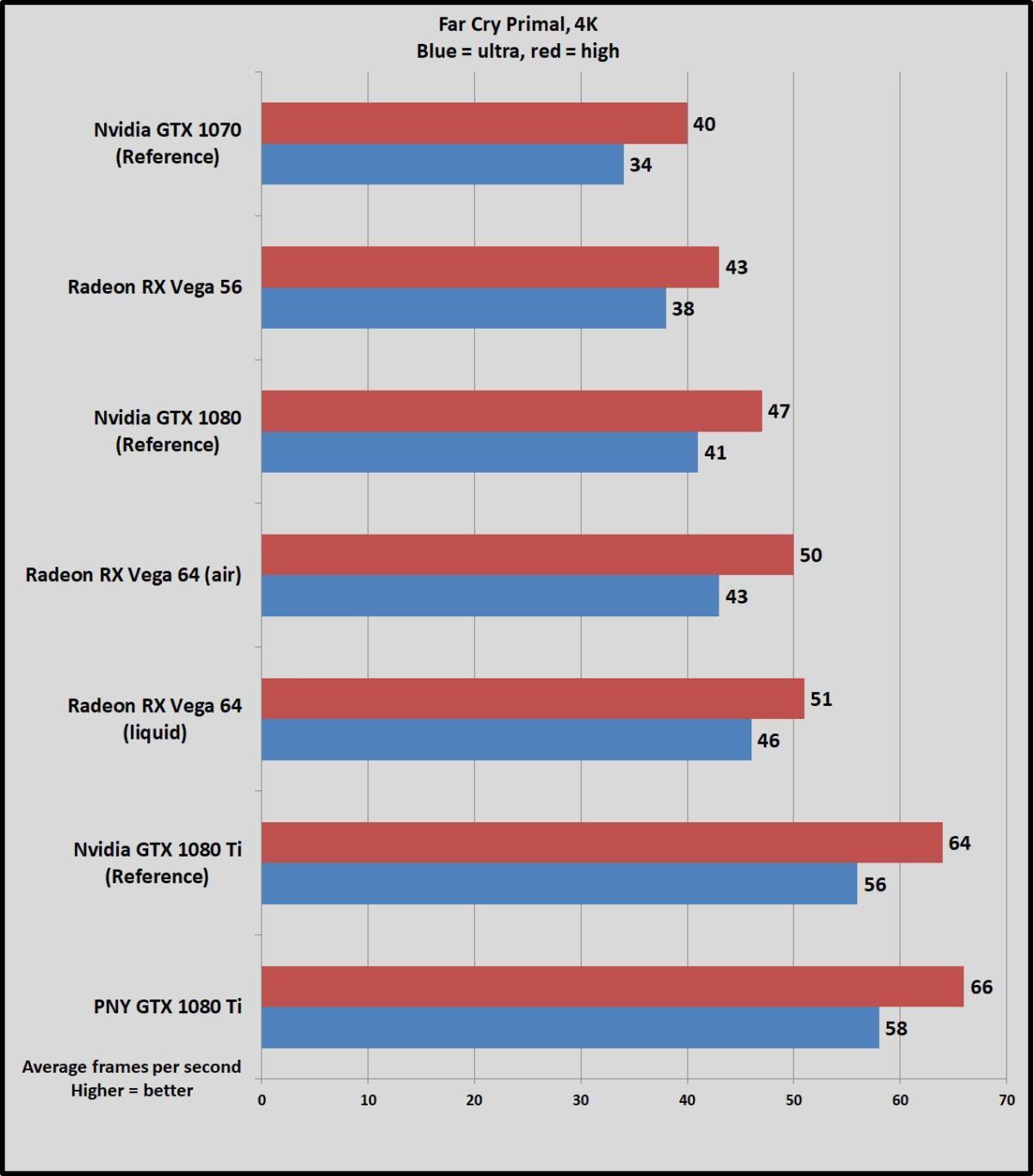

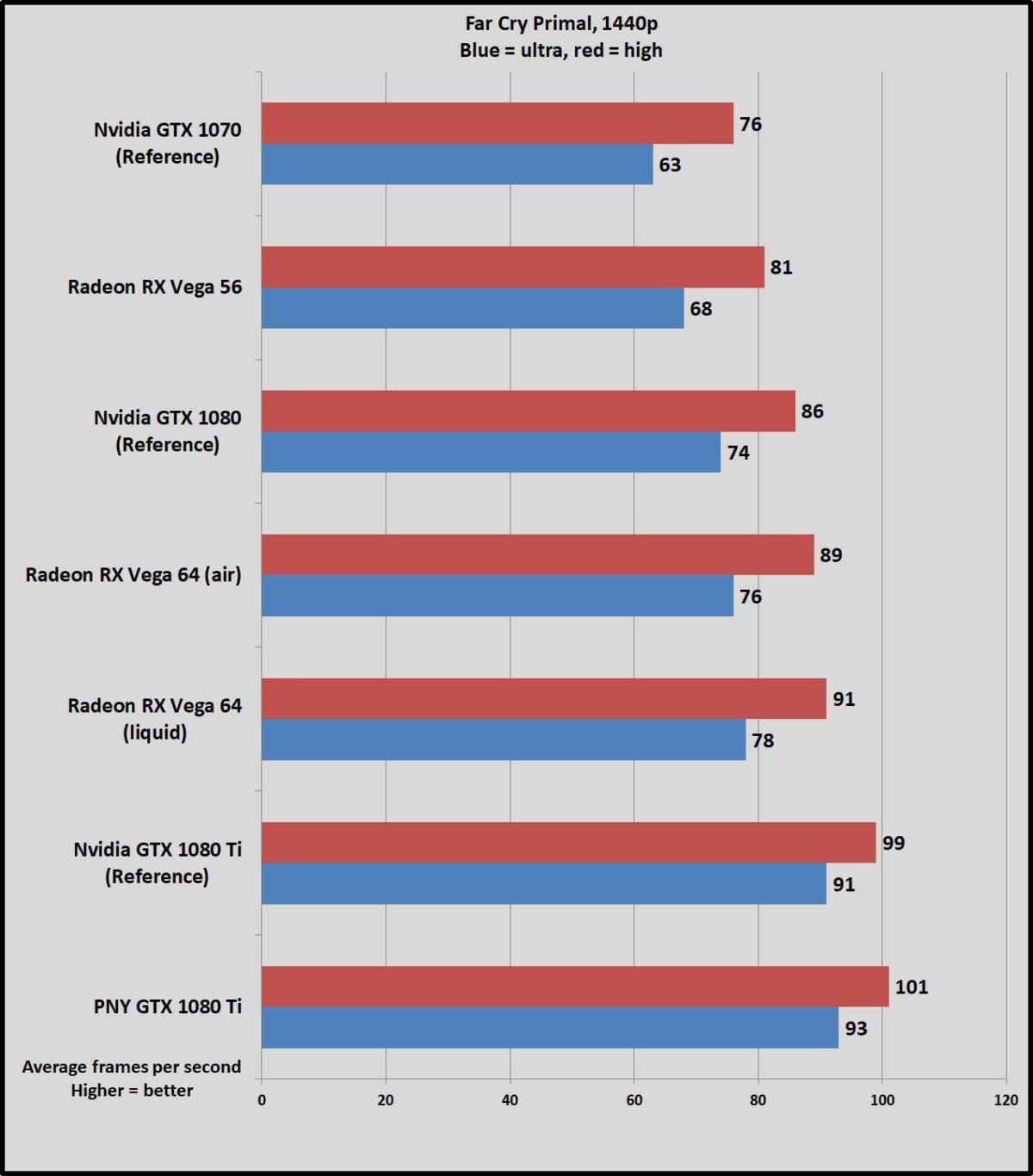

Far Cry Primal is yet another Ubisoft game, but it’s powered by the latest version of the long-running and well-respected Dunia engine. We benchmark the game with the optional Ultra HD texture pack enabled for high-end cards like these.

Brad Chacos/IDG


Both the Vega 56 and Vega 64 beat their GeForce equivalents here, though the GTX 1070 manages to eke slightly ahead if you go all the way down to High settings at 1080p. That’s not a scenario you’d ever actually want to use, though.

The GTX 1080 Ti’s solid lead here makes it the only card capable of flirting with 4K/60fps.


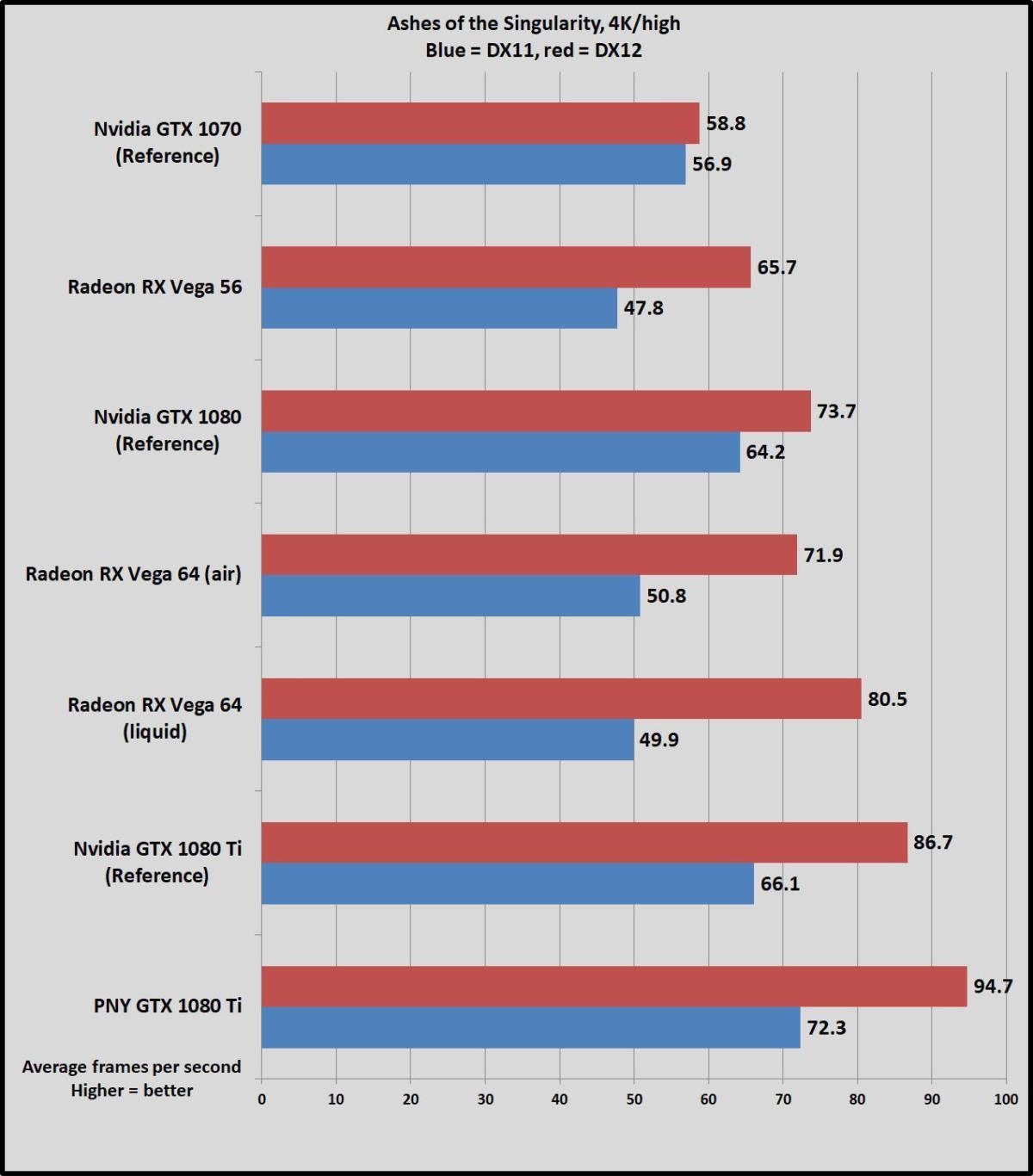

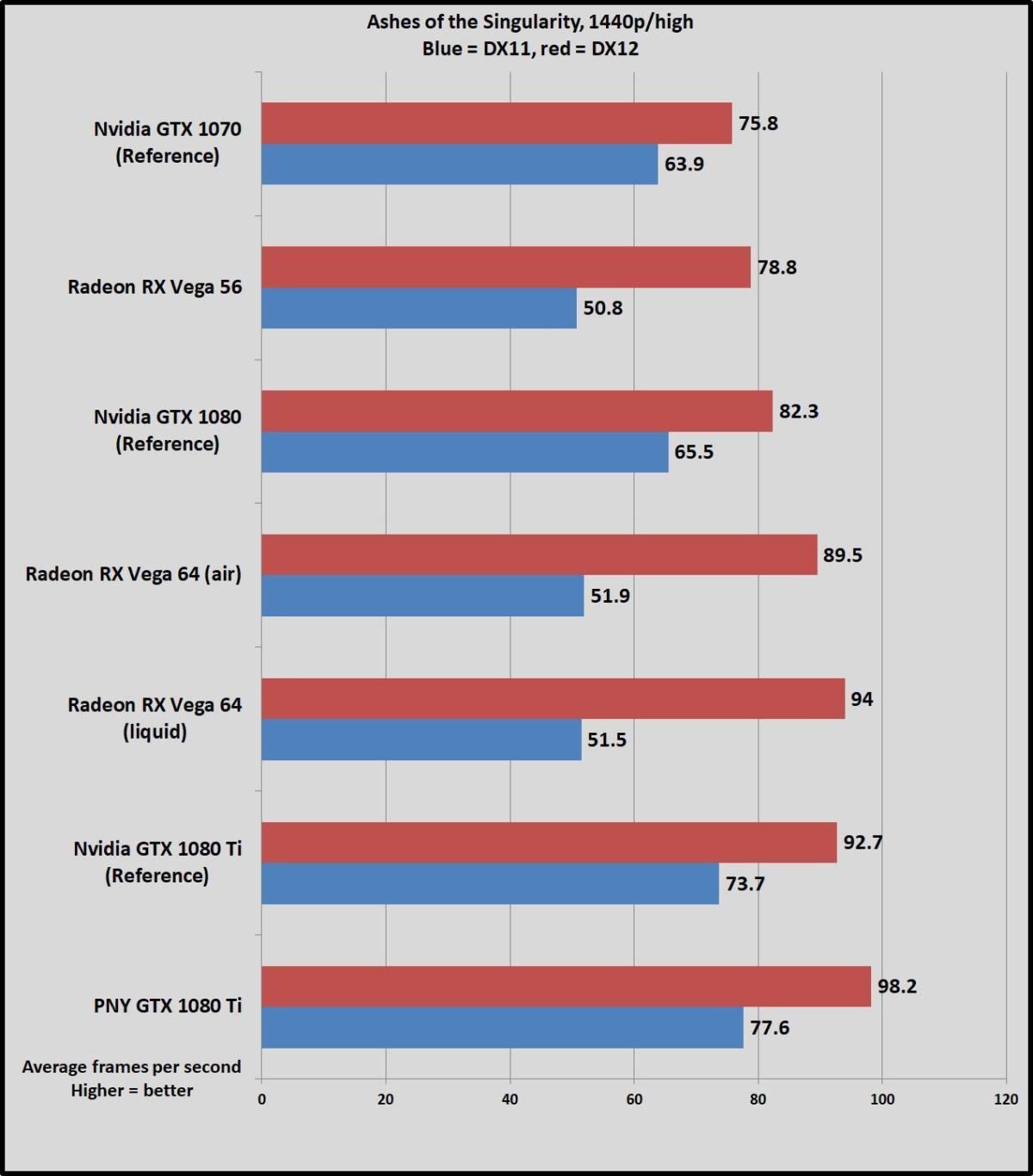

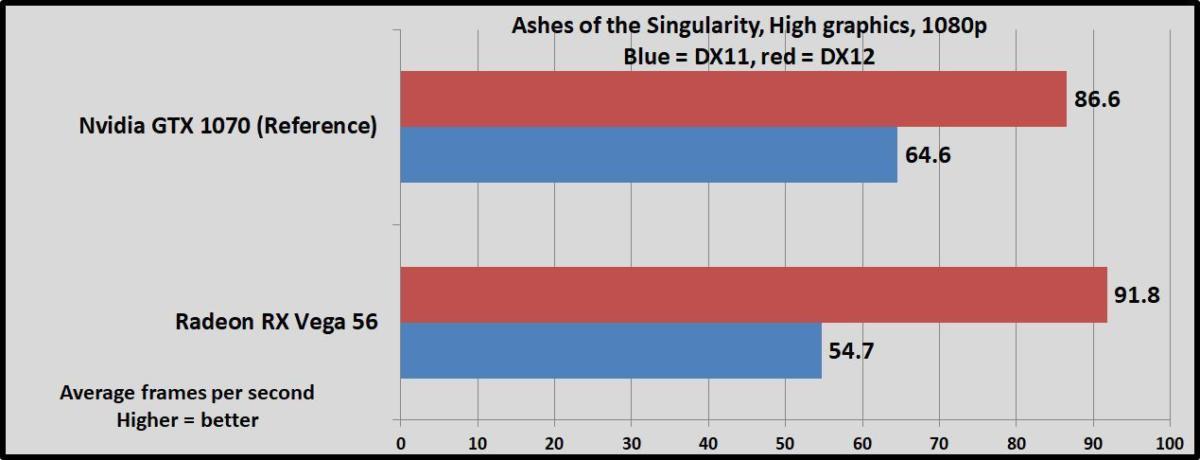

Ashes of the Singularity

Ashes of the Singularity, running on Oxide’s custom Nitrous engine, was an early standard-bearer for DirectX 12, and many months later it’s still the premier game for seeing what next-gen graphics technologies have to offer. Nvidia drivers have greatly improved GeForce performance in Ashes over the past several months. We test the game using the High graphics setting, as the wildly strenuous Crazy and Extreme presets aren’t reflective of real-world usage scenarios.

Brad Chacos/IDG


Vega 56 beats the GTX 1070 across the board in DirectX 12 mode. The GTX 1080 and air-cooled Vega 64 are evenly matched at 4K, with the more potent liquid-cooled version opening a 9.25-percent lead over the GeForce card. AMD’s hardware starts pulling ahead at 1440p resolution, though.

That holds only in DirectX 12, though: Nvidia’s cards trounce Vega in DirectX 11. That’s worth noting because DX12 is available only in Windows 10. Ashes is a definite victory for AMD for Windows 10 users, while Nvidia is the clear victor for everybody else, though the majority of PC gamers have migrated to Windows 10, according to the Steam hardware survey.


Power, heat, noise, clock speeds

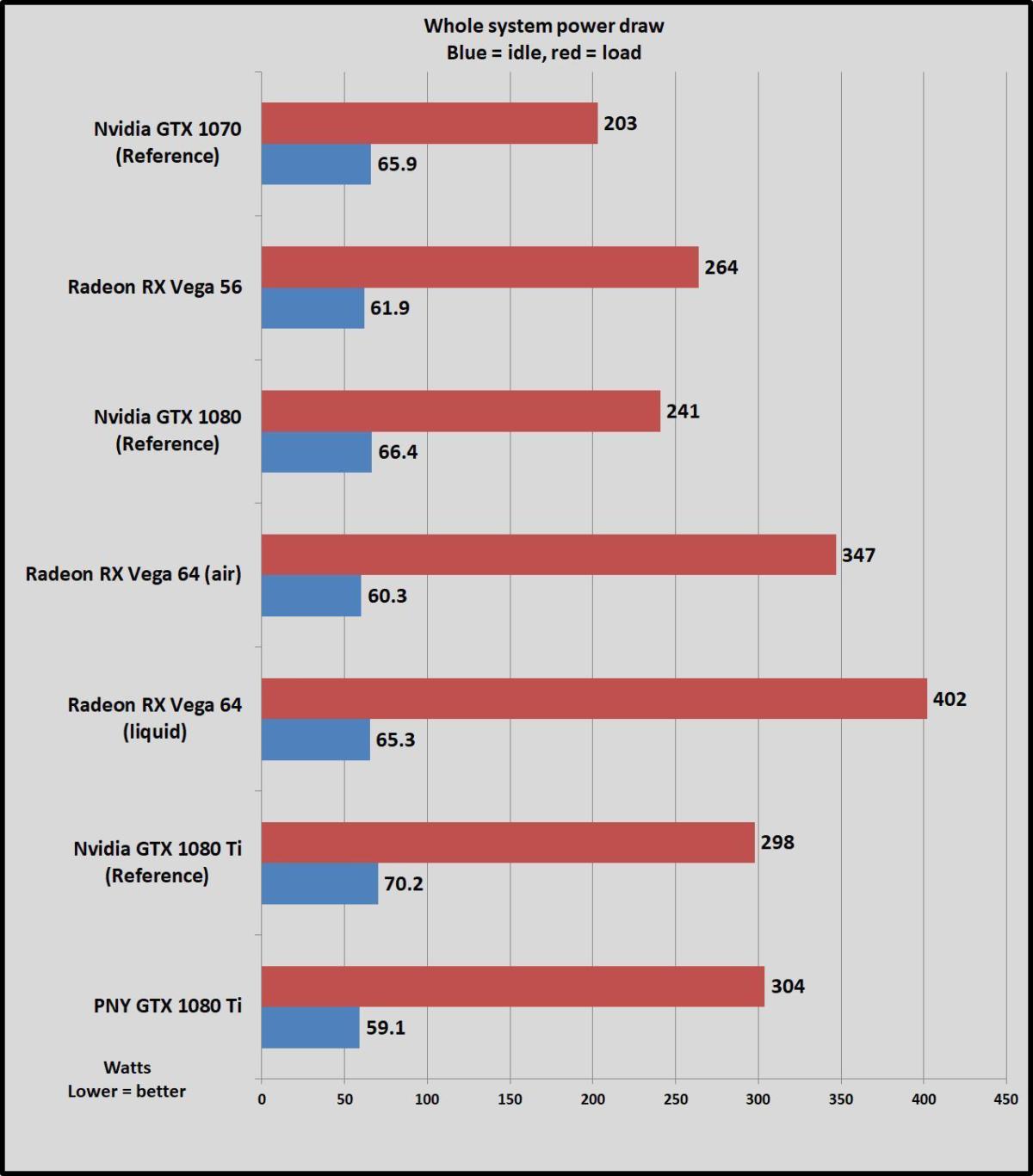

Power

We test power under load by plugging the entire system into a Watts Up meter, running the intensive Division benchmark at 4K resolution, and noting the peak power draw. Idle power is measured after sitting on the Windows desktop for three minutes with no extra programs or processes running.

Brad Chacos/IDG

Holy hell, Vega 64 uses a lot of juice. And that’s not even in Turbo mode.


AMD spent a lot of time talking up Vega’s power efficiency, but it’s clear the company really cranked on the clock speeds to bring performance in line with the GTX 1080, especially when you consider the power savings that HBM offers over traditional memory. At 347 watts of total system power draw, the air-cooled Vega 64 uses 106W more power than the GTX 1080 Founders Edition—heck, even the mightily overclocked EVGA GTX 1080 FTW only used 248W. The liquid-cooled model draws an astonishing 402W. That’s roughly 100W more than even our overclocked GTX 1080 Ti XLR8 requires.
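To put those draws in efficiency terms, the sketch combines two sets of numbers from this review: peak system power in The Division at 4K, and relative performance in Deus Ex: Mankind Divided at 1440p (liquid-cooled Vega 64 17.5 percent faster than the GTX 1080, and 8 percent faster than the air-cooled model). Mixing games and resolutions this way is a rough, back-of-the-envelope estimate, and Deus Ex is a game that favors AMD, so treat the result as a best case for Vega.

```python
# Rough performance per system watt, normalized to the GTX 1080 Founders Edition.
# Caveat: power comes from The Division at 4K, performance from Deus Ex at 1440p.
system_watts = {
    "GTX 1080 FE": 347 - 106,       # 241W, derived from the 106W gap quoted above
    "RX Vega 64 (air)": 347,
    "RX Vega 64 (liquid)": 402,
}

relative_perf = {
    "GTX 1080 FE": 1.0,
    "RX Vega 64 (liquid)": 1.175,          # 17.5% faster than the GTX 1080
    "RX Vega 64 (air)": 1.175 / 1.08,      # liquid model is 8% faster than air
}

baseline = relative_perf["GTX 1080 FE"] / system_watts["GTX 1080 FE"]
efficiency = {
    card: relative_perf[card] / watts / baseline
    for card, watts in system_watts.items()
}
for card, eff in efficiency.items():
    print(f"{card}: {eff:.2f}x the GTX 1080's performance per system watt")
```

Even in this AMD-friendly game, Vega 64 lands at roughly 0.76x (air) and 0.70x (liquid) the GTX 1080’s performance per watt under these assumptions. Whole-system figures actually understate the GPU-only gap, because the rest of the test bench adds the same fixed draw to every card.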

A silver lining for AMD? Vega’s sky-high thirst for power makes the 60W gap between the GTX 1070 and Vega 56 look far less imposing.

While we didn’t measure it here, Radeon Chill can indeed bring down your power use and temperatures significantly, but only in the whitelisted games, and you have to enable it manually (which you should do)! Frame Rate Target Control can also help reduce GPU load. These Vega power results make it clearer why AMD invested so much in power-saving software features in recent months.

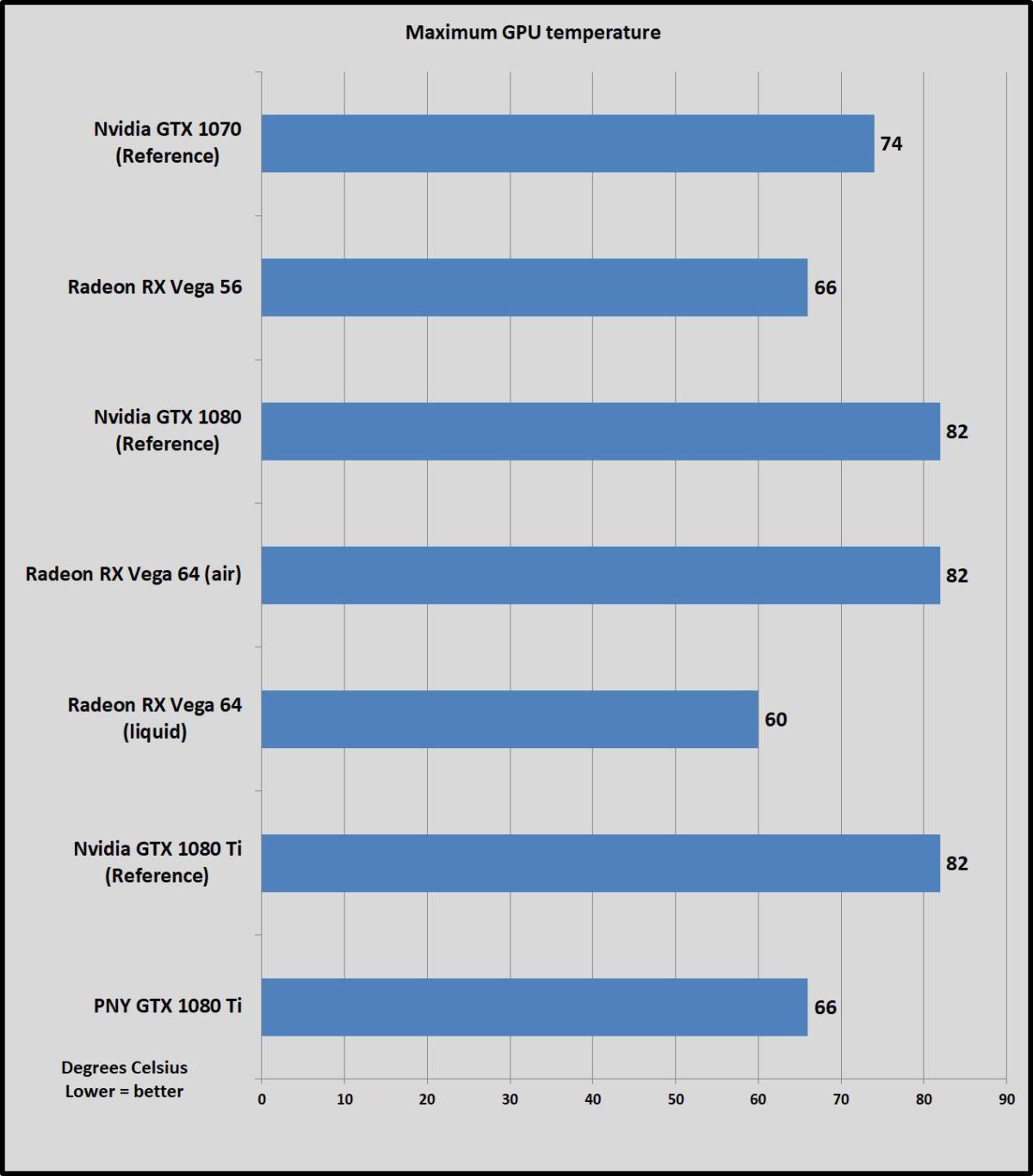

Heat and noise

We test heat during the same intensive Division benchmark at a strenuous 4K resolution, by running SpeedFan in the background and noting the maximum GPU temperature once the run is over.

Brad Chacos/IDG

Despite using more power than the GTX 1070 Founders Edition, the Vega 56 actually runs much cooler, topping out at 66 degrees Celsius. The air-cooled Vega 64 hits the same 82-degree Celsius max as the GTX 1080 and 1080 Ti Founders Edition cards. AMD’s blower-style reference cooler gets awfully loud under load, though, vapor chamber or no.

Speaking of loud, our liquid-cooled Vega 64 suffered from a buzzing coil whine when sitting on menus or the Windows desktop. It goes away in-game, but it isn’t very pleasant. The card hovers between 55 degrees Celsius and its max of 60 degrees Celsius during testing. That’s slightly warmer than you’d expect from a liquid-cooled card; the older Fury X never topped 56 degrees Celsius in our benchmarks. It’s also worth noting that the liquid-cooled version’s 60-degree-Celsius maximum isn’t much chillier than the PNY GTX 1080 Ti XLR8’s triple-fan solution, and that GeForce card delivers a whole lot more performance for roughly the same price.

Given Vega’s wild power draw, the liquid-cooled model probably would’ve been more effective with a 240mm radiator rather than the included 120mm version.

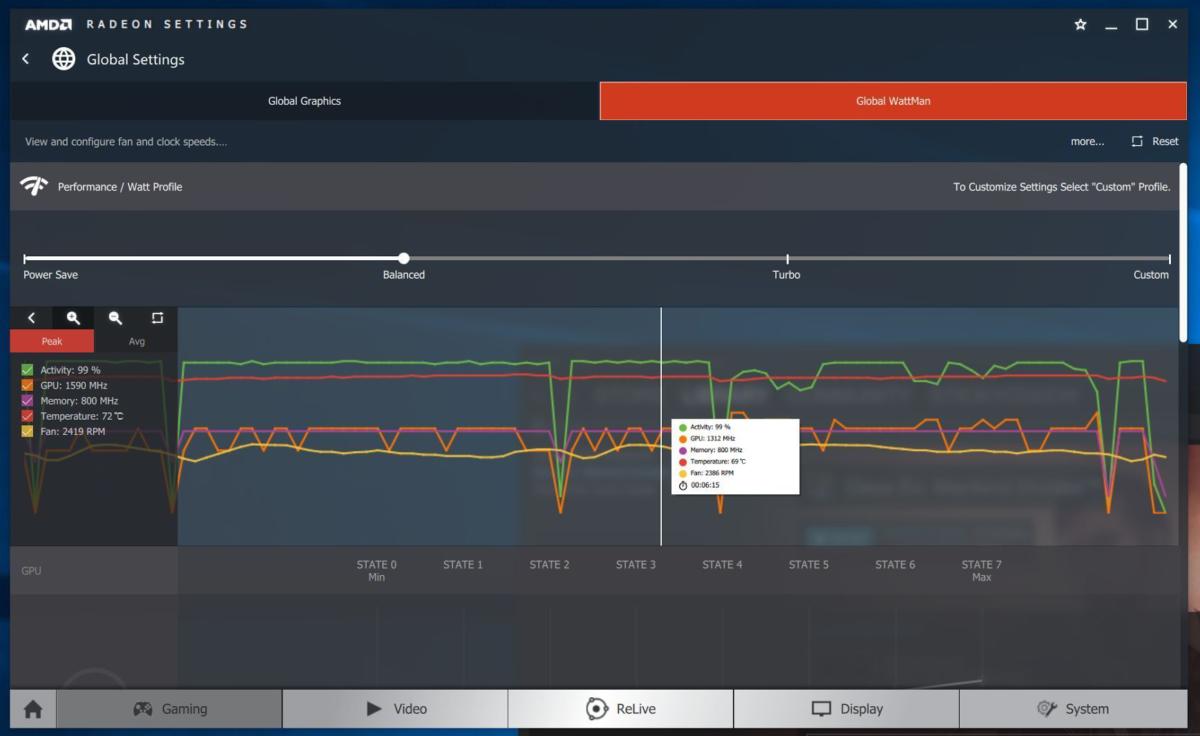

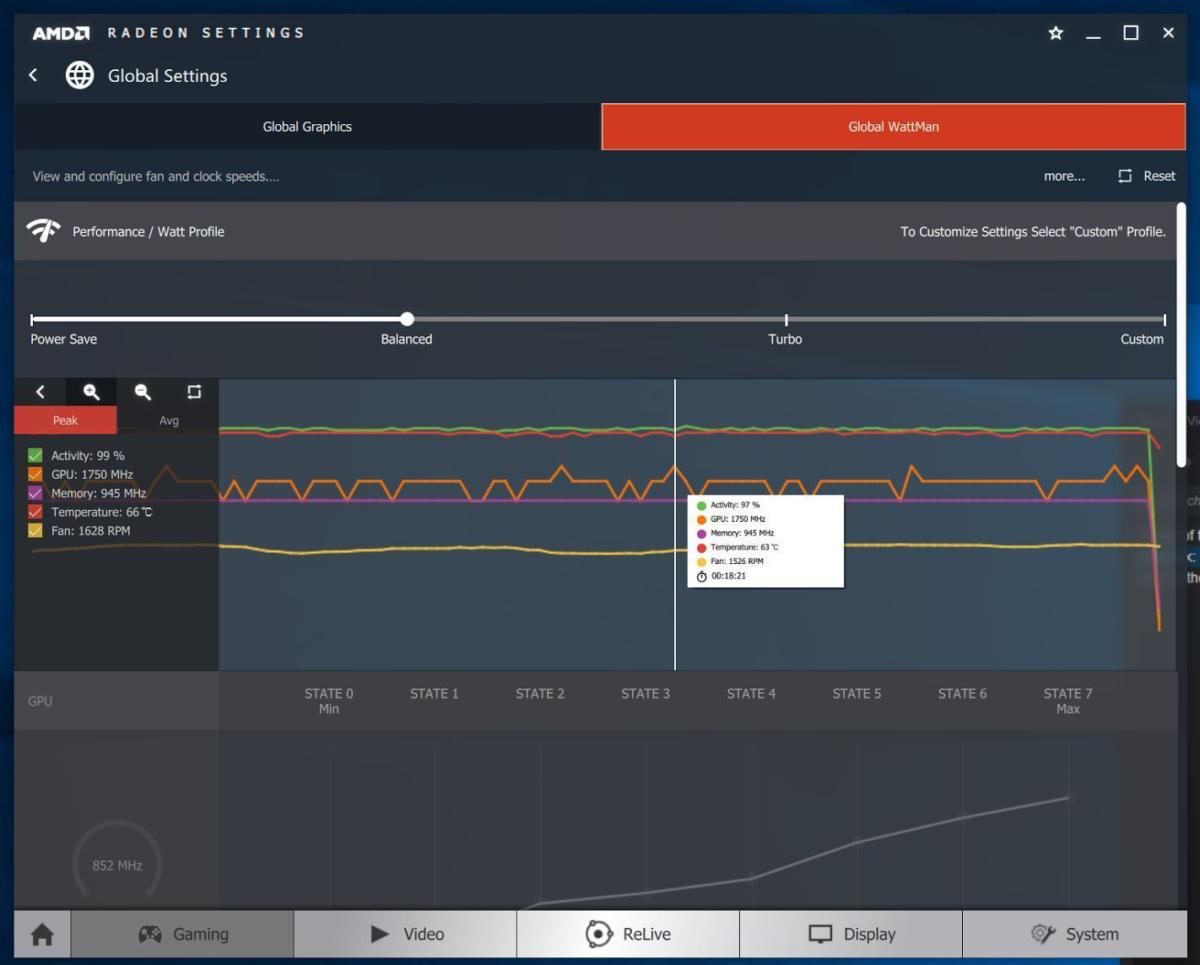

Clock speeds

All of these cards hold their rated boost speeds pretty consistently, with some slight, temporary bumps up or down. Here are screenshots of Radeon Wattman graphs showing each card’s clock speeds during Deus Ex: Mankind Divided benchmark runs; the orange line indicates clock speed.

The Vega 56 steps between 1,312MHz and 1,474MHz.

Brad Chacos/IDG

The Vega 64 usually sticks to its 1,536MHz boost speed.

Brad Chacos/IDG

The liquid-cooled Vega 64 is mostly rock solid at 1,668MHz, but it sometimes dips to 1,560MHz and spikes as high as 1,750MHz.

Brad Chacos/IDG
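The Wattman graphs are a visual check. If you log clock samples yourself, a few summary numbers make “holds its boost speed consistently” concrete. This hypothetical Python helper assumes you already have a list of core-clock readings in MHz from whatever logging tool you use; you supply the rated boost clock, and the 25MHz tolerance for counting a sample as “near boost” is an arbitrary illustrative choice, not an AMD specification.

```python
from statistics import mean
from typing import Dict, Sequence


def summarize_clocks(samples_mhz: Sequence[float], rated_boost_mhz: float,
                     tolerance_mhz: float = 25) -> Dict[str, float]:
    """Summarize GPU core-clock samples (MHz) from one benchmark run.

    A sample counts as "near boost" if it is no more than `tolerance_mhz`
    below the rated boost clock; the default tolerance is an arbitrary choice.
    """
    if not samples_mhz:
        raise ValueError("need at least one clock sample")
    floor = rated_boost_mhz - tolerance_mhz
    near_boost = sum(1 for s in samples_mhz if s >= floor)
    return {
        "min_mhz": min(samples_mhz),
        "max_mhz": max(samples_mhz),
        "mean_mhz": mean(samples_mhz),
        "share_near_boost": near_boost / len(samples_mhz),
    }
```

Fed a run from the liquid-cooled Vega 64, for example, the minimum and maximum would capture its dips toward 1,560MHz and spikes toward 1,750MHz, while `share_near_boost` would show how much of the run it spent at or above its 1,668MHz plateau.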

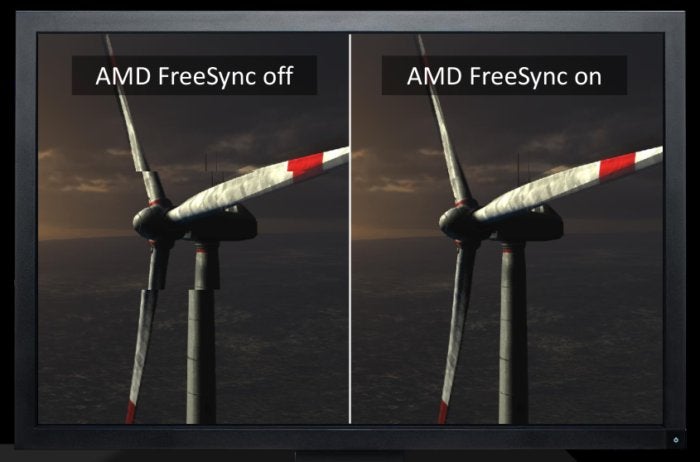

Radeon RX Vega: The FreeSync variable

During pre-launch promotions for Radeon RX Vega, AMD set up blind “taste tests” at fan events. PC gamers got to play Battlefield 1 on 100Hz, 3440×1440 monitors, with one setup using the GTX 1080 and a G-Sync monitor, and another using Vega 64 with a similar FreeSync monitor that cost $300 less.

AMD

AMD Radeon’s Vega “blind taste test” in Budapest.

“Though the Radeon RX Vega + FreeSync (left system) came out on top for most gamers, they said the differences were minimal and couldn’t really tell the difference,” AMD said in the aftermath.

A sizeable chunk of AMD’s Radeon RX Vega reviewer’s guide is also dedicated to touting FreeSync’s benefits and cost advantage versus G-Sync, with expected performance results in games boxed inside “FreeSync ranges” in AMD’s charts. And just in case I didn’t get it, AMD also sent along a ViewSonic XG2700-4K display ($550 on Amazon) so I could test FreeSync’s benefits firsthand.

It’s no wonder AMD’s beating this drum so hard. Once you’ve enjoyed the stutter- and tearing-free experience an adaptive-sync monitor provides, it’s painful to go back to a normal monitor. Heck, many Radeon 290/390/Fury-wielding enthusiasts have refused to pick up a GTX 10-series card simply because Nvidia hardware isn’t FreeSync-compatible.

AMD

Screen tearing is gross. FreeSync (and G-Sync) eliminate it.

As we discuss in PCWorld’s comprehensive FreeSync vs. G-Sync explainer, FreeSync’s open nature means there are many more FreeSync monitors available than G-Sync monitors, and the AMD-friendly displays tend to cost much less. Nvidia views G-Sync as a premium add-on for premium monitors and requires display vendors to use a proprietary hardware module. FreeSync is built atop the VESA Adaptive-Sync standard in DisplayPort 1.2a, and AMD doesn’t even charge certification or licensing costs. FreeSync was made to spread far and wide, and cheaply. On Newegg right now, G-Sync monitors start at $600, while only two FreeSync monitors cost more than $600.

FreeSync indeed tends to be cheaper, but its variability means buying a FreeSync monitor requires a bit more legwork than buying a G-Sync one. Color accuracy isn’t certified. FreeSync monitors support adaptive sync only inside specified refresh rate ranges, and some of those ranges are awfully narrow. If your frame rate falls outside the range, tearing and stuttering return. That’s an easy thing to do at 4K, because many 4K FreeSync monitors kick in only at 40Hz, and Vega has trouble hitting a consistent 40fps in our 4K Deus Ex, Ghost Recon, and Division tests. A FreeSync feature called Low Framerate Compensation (LFC) combats the issue and behaves similarly to G-Sync’s solution, but it’s optional and a lot of monitors don’t have it. In fact, the ViewSonic monitor AMD sent along doesn’t.
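The interplay between a monitor’s FreeSync range, your frame rate, and LFC can be modeled in a few lines. This is a simplified Python sketch, not AMD’s driver logic. It assumes LFC works by showing each frame multiple times so the effective refresh rate lands back inside the monitor’s window, and that a panel qualifies for LFC only if its maximum refresh rate is at least 2.5 times its minimum (the ratio AMD cited when LFC debuted; treat it as an adjustable assumption). Both example monitors are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class FreeSyncRange:
    """A monitor's adaptive-sync window in Hz (example values are hypothetical)."""

    min_hz: float
    max_hz: float
    # Assumed max/min ratio a panel needs for LFC (2.5 is the figure AMD cited
    # when LFC debuted); adjust it if you have better data for a given monitor.
    lfc_ratio: float = 2.5

    def supports_lfc(self) -> bool:
        """True if the window is wide enough for frame multiplication to work."""
        return self.max_hz >= self.lfc_ratio * self.min_hz

    def refresh_for(self, fps: float) -> Optional[float]:
        """Refresh rate the panel could sync to at a given frame rate.

        Returns None when adaptive sync can't cover that frame rate, meaning
        tearing or stuttering returns.
        """
        if fps <= 0:
            raise ValueError("fps must be positive")
        if self.min_hz <= fps <= self.max_hz:
            return fps  # inside the window: refresh simply tracks frame rate
        if fps < self.min_hz and self.supports_lfc():
            # Simplified LFC model: show each frame k times, picking the smallest
            # k that lifts the refresh rate to at least min_hz. As long as
            # lfc_ratio >= 2, fps * k stays below max_hz as well.
            k = math.ceil(self.min_hz / fps)
            return fps * k
        return None  # below the window without LFC, or above max_hz


uhd_no_lfc = FreeSyncRange(min_hz=40, max_hz=60)   # hypothetical 4K panel
wide_range = FreeSyncRange(min_hz=48, max_hz=144)  # hypothetical 144Hz panel

print(uhd_no_lfc.refresh_for(35))  # None: 35fps falls below the 40Hz floor
print(wide_range.refresh_for(35))  # 70: each frame shown twice
```

The narrow 40Hz-to-60Hz window is the scenario described above: dip below 40fps at 4K and adaptive sync simply stops working, while a wider window lets frame multiplication cover the gap.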

Fortunately, the Monitors tab in the chart at the bottom of AMD’s FreeSync page lists the supported FreeSync range of every FreeSync display, so you can check before you buy. Also keep in mind that FreeSync’s price advantage can diminish on exceptionally high-end monitors. Do your homework!

Radeon RX Vega: Buying advice

So was the wait for Vega worthwhile? It’s complicated, made more so by the fact that cryptocurrency miners have greatly inflated today’s graphics card prices—and Nvidia’s next-gen Volta chips loom in the near future.

Brad Chacos/IDG

One thing’s for certain: The $399 Vega 56, which launched on August 28, is the best buy of the bunch. It runs cooler than the GTX 1070 Founders Edition and delivers more performance than Nvidia’s card in many games, hanging tight even in titles that favor GeForce hardware. Sure, it uses more power, but not remarkably so. The Vega 56 is also a huge step up over the (theoretically) $240 8GB Radeon RX 580, delivering between 25 percent and 45 percent more performance depending on the game and resolution. FreeSync’s value is alluring indeed if you’re looking to get into 1440p or 1080p/144Hz gaming without breaking the bank.

Speaking of which, current street prices for GTX 1070 cards are another point in Vega 56’s favor: The cheapest GTX 1070 currently available on Newegg is the MSI GTX 1070 Gaming, for $470. That’s a whopping $120 over the GTX 1070’s $350 MSRP. Fingers crossed miners don’t similarly jack up Vega prices after launch. Considering how large the GPU die is and how limited HBM2 production appears to be, I don’t expect abundant supplies to be floating around to meet both miner demand and pent-up gamer demand for Vega.

The $500 air-cooled Vega 64 is a trickier proposition now that GTX 1080 prices are finally starting to settle down around the card’s $500 MSRP. Vega 64 trades blows with the reference GTX 1080 depending on the game you’re playing, but it uses a ton of electricity to do so. The only real reason to buy Vega 64 over a GeForce GTX 1080 is if you plan to pair it with a FreeSync monitor for 1440p or ultrawide gaming at a high refresh rate (like the superb, hassle-free Nixeus EDG 27), or 4K gaming (often sub-60fps).

Affordable adaptive sync or vastly superior power efficiency? Pick your pleasure. There’s no wrong answer… unless you choose Vega and don’t get a FreeSync monitor.

Brad Chacos/IDG

We can’t recommend the liquid-cooled Vega 64 no matter how gorgeous it looks. The card’s performance results leave me very interested in seeing what overclocked Vega 64 cards with custom cooling solutions can do in the future, but at this time the liquid-cooled Vega 64 has too many strikes against it. The coil whine isn’t guaranteed to affect every unit, yet even taking that off the table, the awkward tubing, wild power draw, essentially GTX 1080-level performance, and the fact that it’s limited to a $699 “Radeon Aqua Pack” edition render it undesirable for the most part. It’d be much easier to swallow if it were available in a standalone version for $100 less. As-is, the GeForce GTX 1080 Ti blows it away in performance for roughly the same price.

In fact, give the Radeon Pack editions of all these cards a hard pass unless you already planned to pick up both Prey and Wolfenstein II: The New Colossus (a $120 value) at full price, build a swanky Ryzen 7 system with a premium motherboard, or buy a $950 Samsung monitor (a price that blows away FreeSync’s value proposition) for $750, all only at select retailers and as part of a single massive purchase. Again, our Radeon Pack explainer dives into the details, but as configured, the bundles don’t make much sense for most buyers, and the $100 surcharge pushes the Vega cards into “not worth it” territory unless you plan to put the extras to good use.

Radeon RX Vega: The big picture

Brad Chacos/IDG

Taking a step back, it’s great to see AMD finally return to enthusiast-class graphics cards. Dedicated FreeSync owners finally have worthy high-end gaming options to drive their displays! Competition is back!

But it’s hard not to feel a bit let down by Vega on the gaming front.

Nvidia launched the Pascal GPU-based GTX 10-series 15 months ago, in May 2016. It’s taken AMD this long to release graphics cards that merely compete with the GTX 1070 and GTX 1080, and Vega needs a far larger die (486mm² vs. 314mm² for the GP104 chip inside the GTX 1070 and 1080) and far more power to do even that. The Titan Xp and GTX 1080 Ti still reign comfortably supreme in performance. All this comes after AMD mocked Nvidia’s forthcoming Volta GPU architecture in a video revealed at CES in January, stoking hopes that Vega would be an utter beast.

AMD

Eight months later, Vega can only battle the aging GTX 10-series, and Nvidia has already shown off Volta in its ultimate data-center form. Volta-based GeForce graphics cards could feasibly drop at any point and ruin Vega’s fun, though Nvidia may wait until the new year. Vega 56 and Vega 64 hold up pretty well in raw performance against Nvidia’s graphics cards today, but you have to wonder how they’ll fare when fresh Volta cards arrive.

Taking a step even further back, AMD’s Vega architecture works in important features, like FP16 and the technically stunning high-bandwidth cache controller, that could prove interesting for gaming in the future but seem designed more to earn the company a foothold in data centers and machine-learning scenarios. That’s where the real money is these days, so the focus there makes sense. Nvidia creates different GPUs for different market segments, but the much smaller AMD simply can’t afford to do so.

Radeon RX Vega ain’t bad, but it ain’t mind-blowing despite some nifty tricks, and it’s awfully late to the game. Here’s hoping its successor, Navi, will be able to push the gaming envelope in 2018…and that the wait won’t be as excruciatingly long as it’s been for Vega.
